Learn how to easily migrate a GNU/Linux machine from Amazon Web Services (AWS) to Jotelulu.

There might come a time when you want to migrate a machine from your previous infrastructure in order to centralise everything. To do this, you will need to extract the server from its previous location, in this case, Amazon Web Services (AWS), and move it to another location, such as Jotelulu.

In this brief tutorial, we will explain, step by step, just how to carry out this process.

How to migrate a GNU/Linux server from AWS to Jotelulu

Before you get started

To successfully complete this tutorial and migrate a server from Amazon Web Services (AWS) to your Jotelulu Servers subscription, you will need:

- To be registered as a Partner on the Jotelulu platform and have logged in.

- To have administrator permissions for your company or a Servers subscription.

- To have an AWS subscription with at least one server that you can export.

Limitations:

- AWS does not allow you to export images that contain third-party software provided by AWS. As a result, you cannot export Windows or SQL Server images, or any image created from an image in the AWS Marketplace.

- It is not possible to export images with EBS-encrypted snapshots in the block device mapping.

- You can only export EBS data volumes that are specified in the block device mapping.

- You cannot export EBS volumes attached after instance launch.

- You cannot export an image from Amazon EC2 if it has been shared with you from another AWS account.

- You cannot have multiple export image tasks in progress for the same AMI at the same time.

- By default, you cannot have more than 5 conversion tasks per region in progress at the same time. This limit is adjustable up to 20.

- You cannot export VMs with volumes greater than 1 TB.
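Once the AWS CLI is installed (see Part 1), you can check the size and encryption limitations above before you start. The Python sketch below is our own illustration, not part of the Jotelulu or AWS tooling. It reads the JSON printed by “aws ec2 describe-volumes --filters Name=attachment.instance-id,Values=<INSTANCE-ID>” and flags any volume that is larger than 1 TB or encrypted with EBS encryption. It assumes that the 1 TB limit means 1024 GiB, and it cannot detect the other limitations, such as Marketplace images or volumes attached after launch.

```python
import json
from typing import List

# Assumption: the 1 TB limit is treated as 1024 GiB (EBS reports volume sizes in GiB)
MAX_VOLUME_GIB = 1024


def export_blockers(describe_volumes_json: str) -> List[str]:
    """Return one message per size/encryption limitation broken by the volumes
    in the JSON output of "aws ec2 describe-volumes"."""
    problems = []
    for volume in json.loads(describe_volumes_json).get("Volumes", []):
        volume_id = volume.get("VolumeId", "unknown volume")
        size_gib = volume.get("Size", 0)
        if size_gib > MAX_VOLUME_GIB:
            problems.append(f"{volume_id}: {size_gib} GiB is larger than the 1 TB export limit")
        # In line with the EBS encryption limitation listed above
        if volume.get("Encrypted", False):
            problems.append(f"{volume_id}: encrypted EBS volumes cannot be exported")
    return problems
```

An empty list means that neither check found a problem. It does not mean that the instance meets every limitation in the list above.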

Preparing the GNU/Linux machine

Before starting the migration process, you will need to prepare the server for migration by completing each of the following steps:

- Enable SSH (Secure Shell) to allow remote access.

- Adjust any firewall settings on your machine to allow remote SSH sessions (e.g., iptables).

- Create an additional user (non-root) to use during SSH sessions (e.g., create a Jotelulu user).

- Check that the VM uses GRUB (Grand Unified Bootloader), either GRUB Legacy or GRUB 2, as its boot loader.

- Check that the GNU/Linux server uses one of the following root file systems to avoid any issues: EXT2, EXT3, EXT4, Btrfs, JFS, or XFS.
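You can check the last two points on the VM itself by looking at “/proc/mounts” and the “/boot” directory. The Python sketch below is a hypothetical helper, not part of any official tool. It assumes that the VM exposes “/proc/mounts”, as mainstream GNU/Linux distributions do. It also assumes that GRUB keeps its files in “/boot/grub” or “/boot/grub2”, which is a heuristic rather than a guarantee.

```python
from pathlib import Path
from typing import List, Optional

# Root file systems listed as supported in the checklist above (lower case, as the kernel reports them)
SUPPORTED_ROOT_FS = {"ext2", "ext3", "ext4", "btrfs", "jfs", "xfs"}


def root_fs_type(mounts_text: str) -> Optional[str]:
    """Return the file system type mounted on "/" in /proc/mounts-style text.

    Each line is: device mount_point fs_type options dump pass.
    If "/" appears more than once (e.g. an early "rootfs" entry), the last
    entry is kept because it is the mount that is actually visible.
    """
    fs_type = None
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 3 and fields[1] == "/":
            fs_type = fields[2]
    return fs_type


def grub_dirs(boot: Path = Path("/boot")) -> List[str]:
    """Heuristic: list the GRUB directories present under /boot
    ("grub" is common on Debian/Ubuntu, "grub2" on CentOS/RHEL)."""
    return [name for name in ("grub", "grub2") if (boot / name).is_dir()]
```

On the VM, root_fs_type(Path("/proc/mounts").read_text()) should return one of the values in SUPPORTED_ROOT_FS, and grub_dirs() should return a non-empty list.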

Part 1 – Installing AWS CLI on your local device

Once you have prepared the VM that you wish to export, the next thing you need to do is set up the AWS Command Line Interface on the system that you are using to launch the migration (usually your local device).

AWS CLI is the tool that we will use to send commands to AWS.

First, go to the “AWS Command Line Interface” page on the Amazon website. If you are using a Windows device, download the installer for your system (32-bit or 64-bit).

On the other hand, if you are working on a GNU/Linux device, you can use the installer that AWS provides for Linux or, for version 1 of the CLI, install Python and pip and then install the CLI with pip.

For this tutorial, we will be using Microsoft Windows and will follow the process for that operating system.

Part 1 – Download and install the appropriate version of AWS CLI

Once the download is complete, run the file as an administrator. The installation process is very simple and requires little more than repeatedly clicking “Next”.

Once you have installed the application, make sure that its installation folder is included in the Windows PATH environment variable so that you can run AWS CLI commands from any directory.

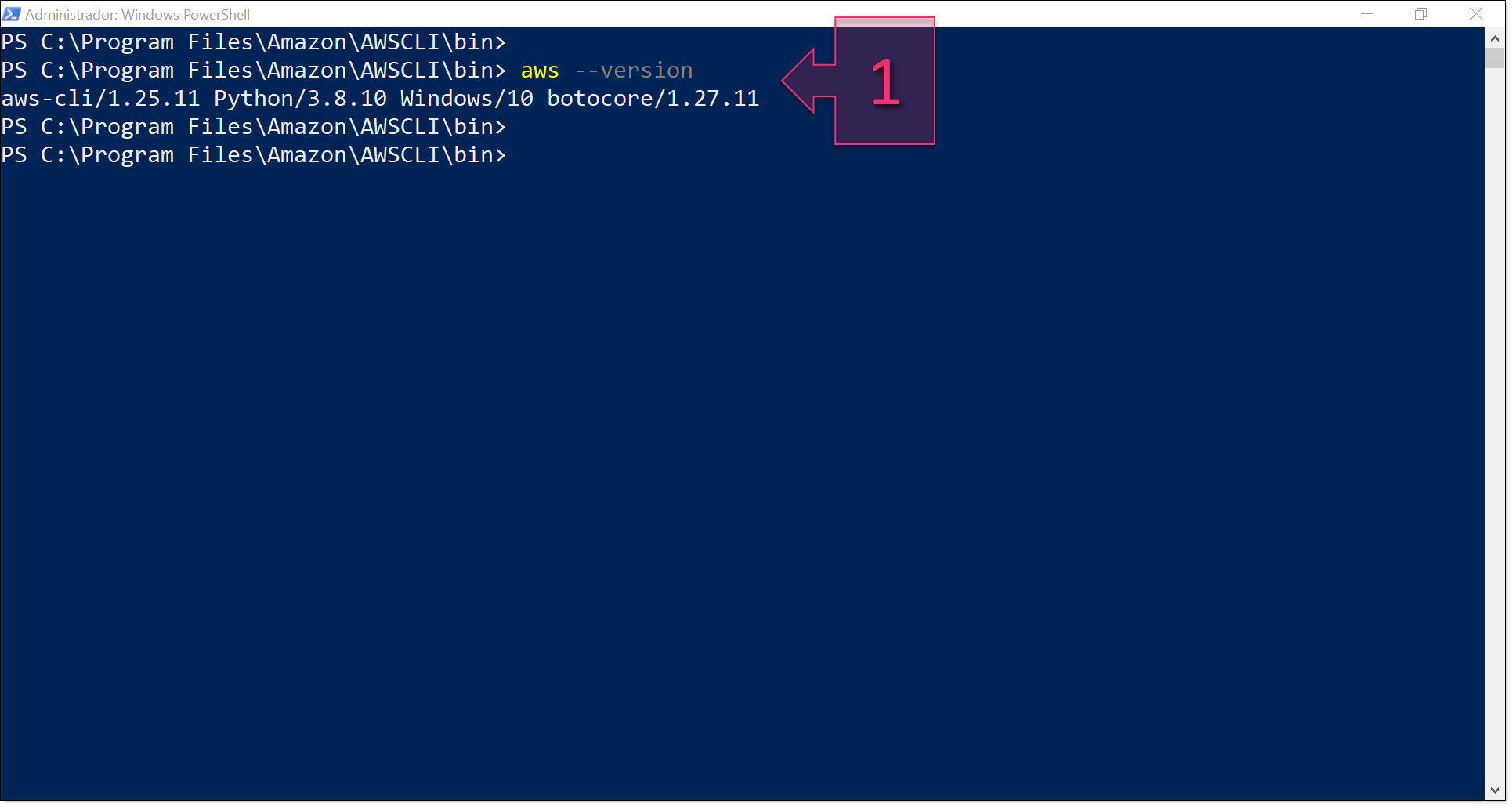

To check that everything has been installed correctly and that you can use AWS CLI commands, open the command prompt and run the command “aws --version” (1). The prompt will return the version of AWS CLI that is installed on the system.

Part 1 – Check the version of AWS CLI
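If you want to script this check, for example to confirm that the CLI is available before launching the export, the following Python sketch runs “aws --version” and extracts the version number. It assumes that the output contains the usual “aws-cli/X.Y.Z” token, as in “aws-cli/2.15.30 Python/3.11.8 Windows/10 exe/AMD64 prompt/off”. It reads both standard output and standard error because some older releases printed the version to standard error.

```python
import re
import shutil
import subprocess
from typing import Optional, Tuple

_VERSION_RE = re.compile(r"aws-cli/(\d+)\.(\d+)\.(\d+)")


def parse_aws_cli_version(output: str) -> Optional[Tuple[int, int, int]]:
    """Return (major, minor, patch) from "aws --version" output, or None if absent."""
    match = _VERSION_RE.search(output)
    if match is None:
        return None
    major, minor, patch = (int(part) for part in match.groups())
    return major, minor, patch


def installed_aws_cli_version() -> Optional[Tuple[int, int, int]]:
    """Run "aws --version" if the aws executable can be found on the PATH."""
    executable = shutil.which("aws")
    if executable is None:
        return None  # AWS CLI not installed, or its folder is not on the PATH
    result = subprocess.run([executable, "--version"], capture_output=True, text=True, check=False)
    # Read both streams: some older releases print the version to stderr
    return parse_aws_cli_version(result.stdout + result.stderr)
```

A result of None from installed_aws_cli_version() usually means that the PATH step above has not taken effect yet. In that case, open a new terminal and try again.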

Once you have checked that everything is working correctly, it is time to move on to the next part of the process.

Part 2 – Preparing the AWS S3

The next step involves creating an Amazon S3 bucket in the same region as the VM that you wish to export. If, for example, your server is hosted in Ireland (eu-west-1), the S3 bucket should also be created in Ireland (eu-west-1).

There are multiple ways to create the bucket: you can use AWS CLI, Terraform or the AWS management console. In this tutorial, we are going to use the management console since it is the simplest method.
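If you would rather use AWS CLI than the management console, you can create the bucket itself with “aws s3api create-bucket”. The Python sketch below only builds that command. It is an illustration under our own assumptions and does not apply the ACL and public access settings covered in the following steps. Note that the us-east-1 region must not be given a location constraint, while every other region needs one.

```python
from typing import List


def create_bucket_command(bucket: str, region: str) -> List[str]:
    """Build an "aws s3api create-bucket" command for a bucket in the given region."""
    command = ["aws", "s3api", "create-bucket", "--bucket", bucket, "--region", region]
    # us-east-1 rejects an explicit LocationConstraint; all other regions require one
    if region != "us-east-1":
        command += ["--create-bucket-configuration", f"LocationConstraint={region}"]
    return command


if __name__ == "__main__":
    # Print the command so that it can be reviewed before running it
    print(" ".join(create_bucket_command("bucketnacho01", "eu-west-1")))
```

To actually create the bucket, you could pass the list to subprocess.run(..., check=True) from the same session in which your AWS credentials are loaded.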

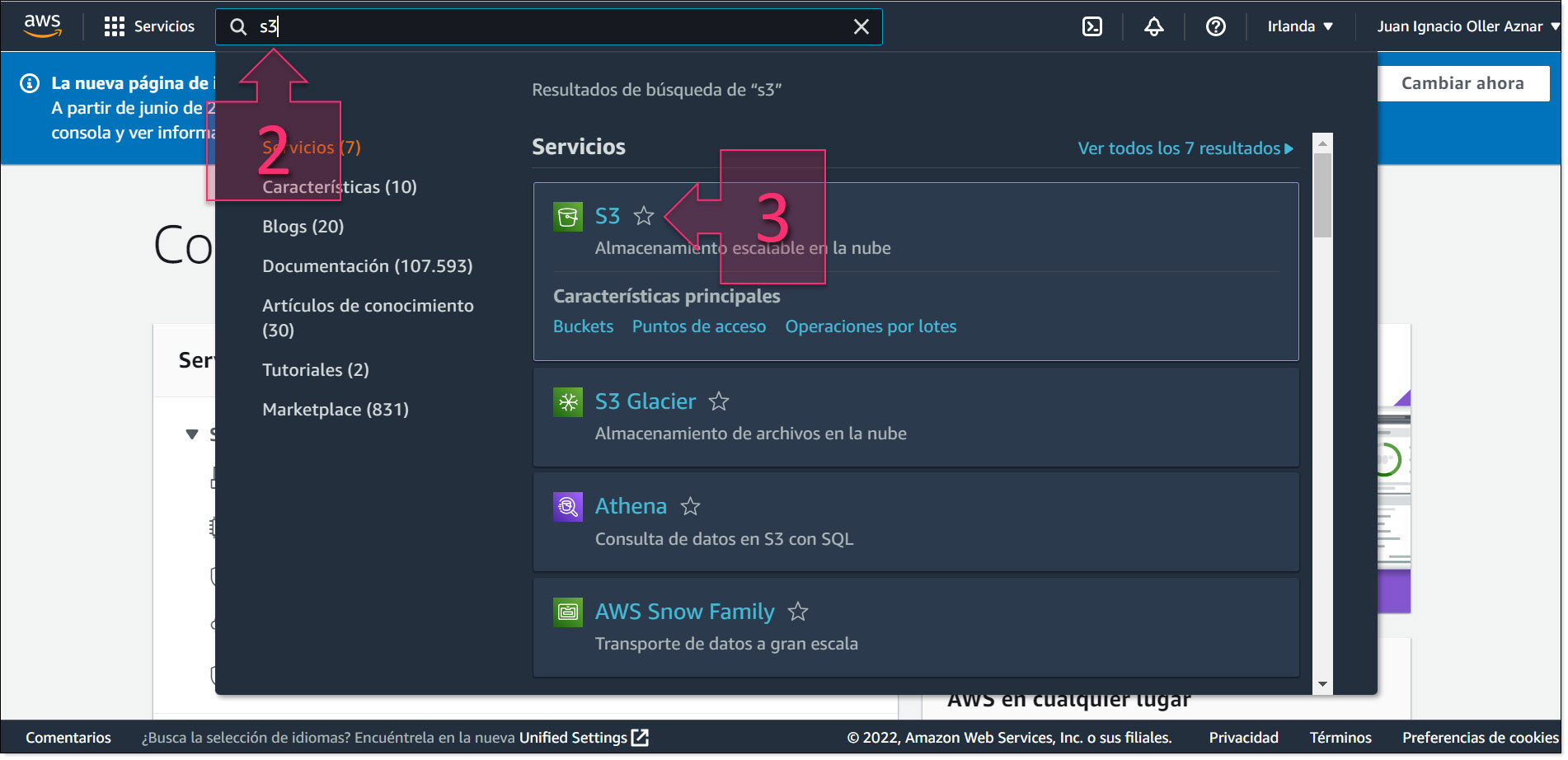

At the top of the AWS management console, you will find the search bar. Type in “S3” (2) and from the results that appear, click on “S3” (3).

Part 2 – Search for S3 on the AWS management console

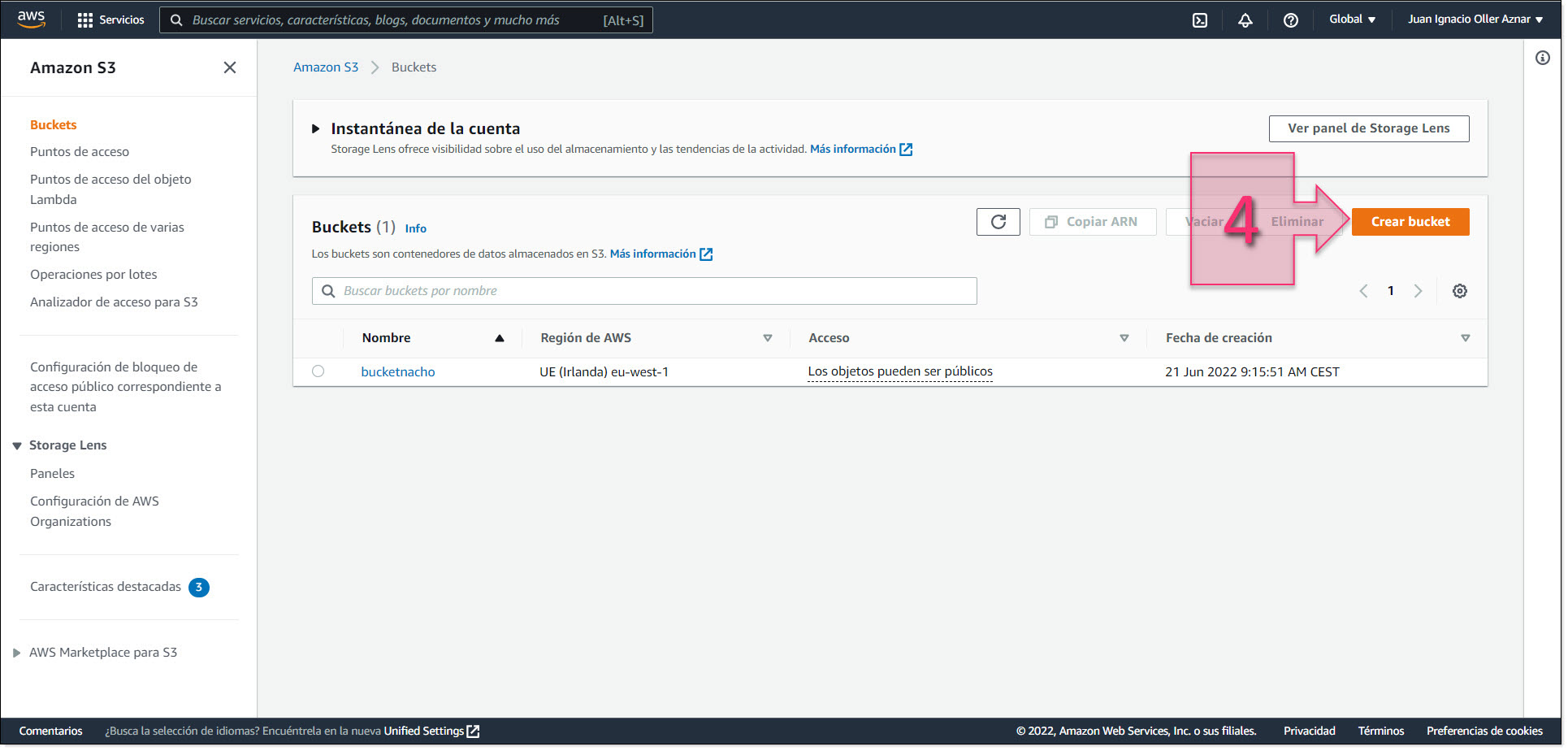

Once you have loaded the S3 management console, click on the “Create Bucket” button in the top right of the screen (4).

Part 2 – Click on “Create Bucket” in the S3 management console

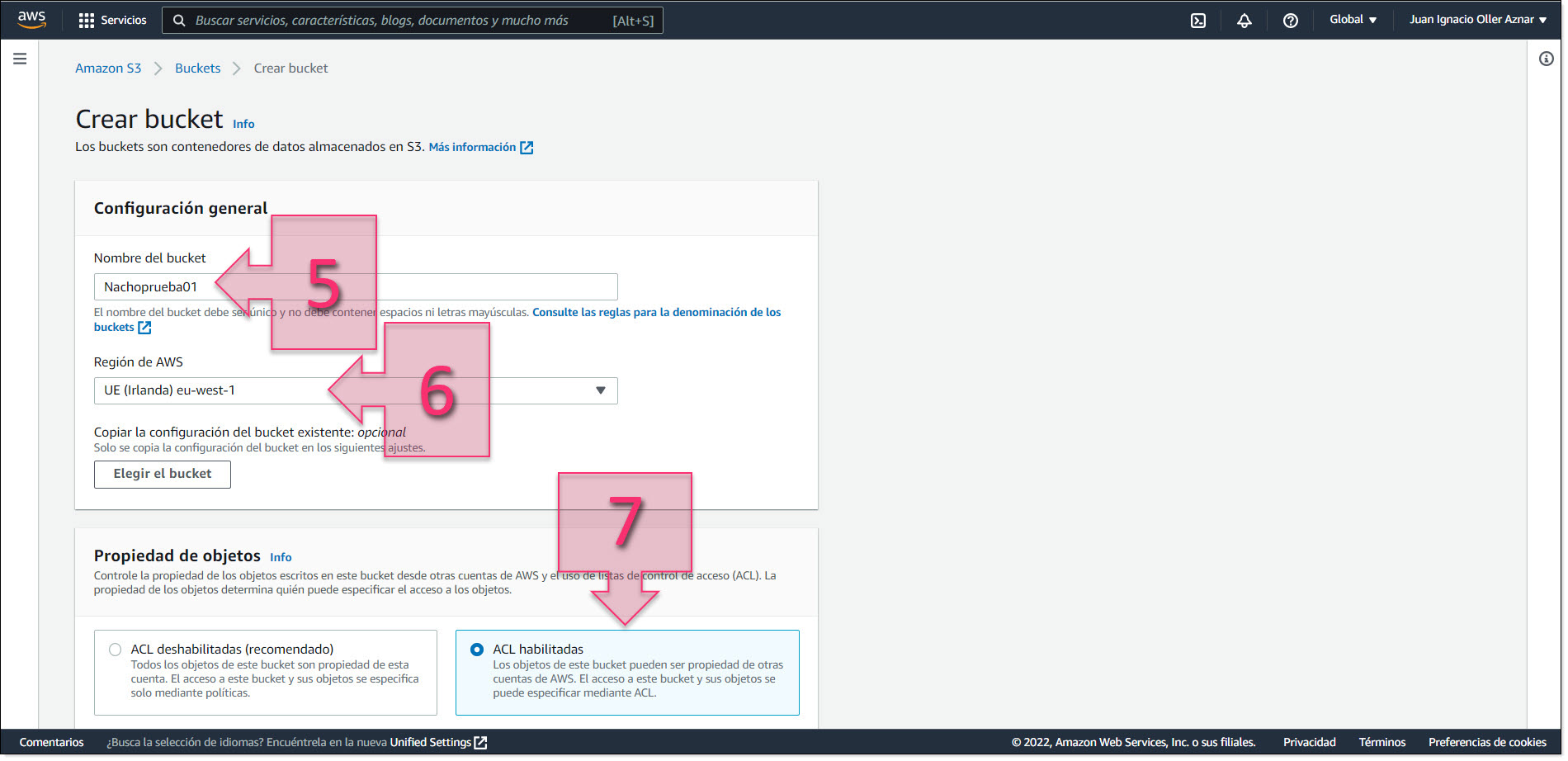

On the “Create Bucket” screen, enter a bucket name (5) and choose the Region where it will be deployed (6). As we mentioned before, this must be the same region as the VM that you intend to export.

Under “Object Ownership”, select “ACLs enabled” (7).

Part 2 – Enter a name and region for the new S3 bucket
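Bucket names must also follow the S3 naming rules and be unique across AWS. Only AWS can check uniqueness, but you can check the format locally. The Python sketch below covers the main rules as we understand them: 3 to 63 characters; lowercase letters, digits, dots and hyphens only; starting and ending with a letter or digit; no adjacent dots; and not shaped like an IP address. It also checks two reserved affixes. It is not a complete implementation of the AWS rules.

```python
import re
from typing import List

# 3-63 characters: lowercase letters, digits, dots and hyphens,
# starting and ending with a lowercase letter or digit
_NAME_RE = re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]")
_IP_RE = re.compile(r"\d{1,3}(?:\.\d{1,3}){3}")


def bucket_name_problems(name: str) -> List[str]:
    """Return the naming rules broken by name (an empty list if none are found)."""
    problems = []
    if not _NAME_RE.fullmatch(name):
        problems.append("use 3-63 characters (a-z, 0-9, '.', '-'), starting and ending with a letter or digit")
    if ".." in name:
        problems.append("do not use two adjacent dots")
    if _IP_RE.fullmatch(name):
        problems.append("do not format the name as an IP address")
    if name.startswith("xn--"):
        problems.append("do not start the name with 'xn--'")
    if name.endswith("-s3alias"):
        problems.append("do not end the name with '-s3alias'")
    return problems
```

For example, bucket_name_problems("bucketnacho01") returns an empty list, while a name containing capital letters returns at least one problem.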

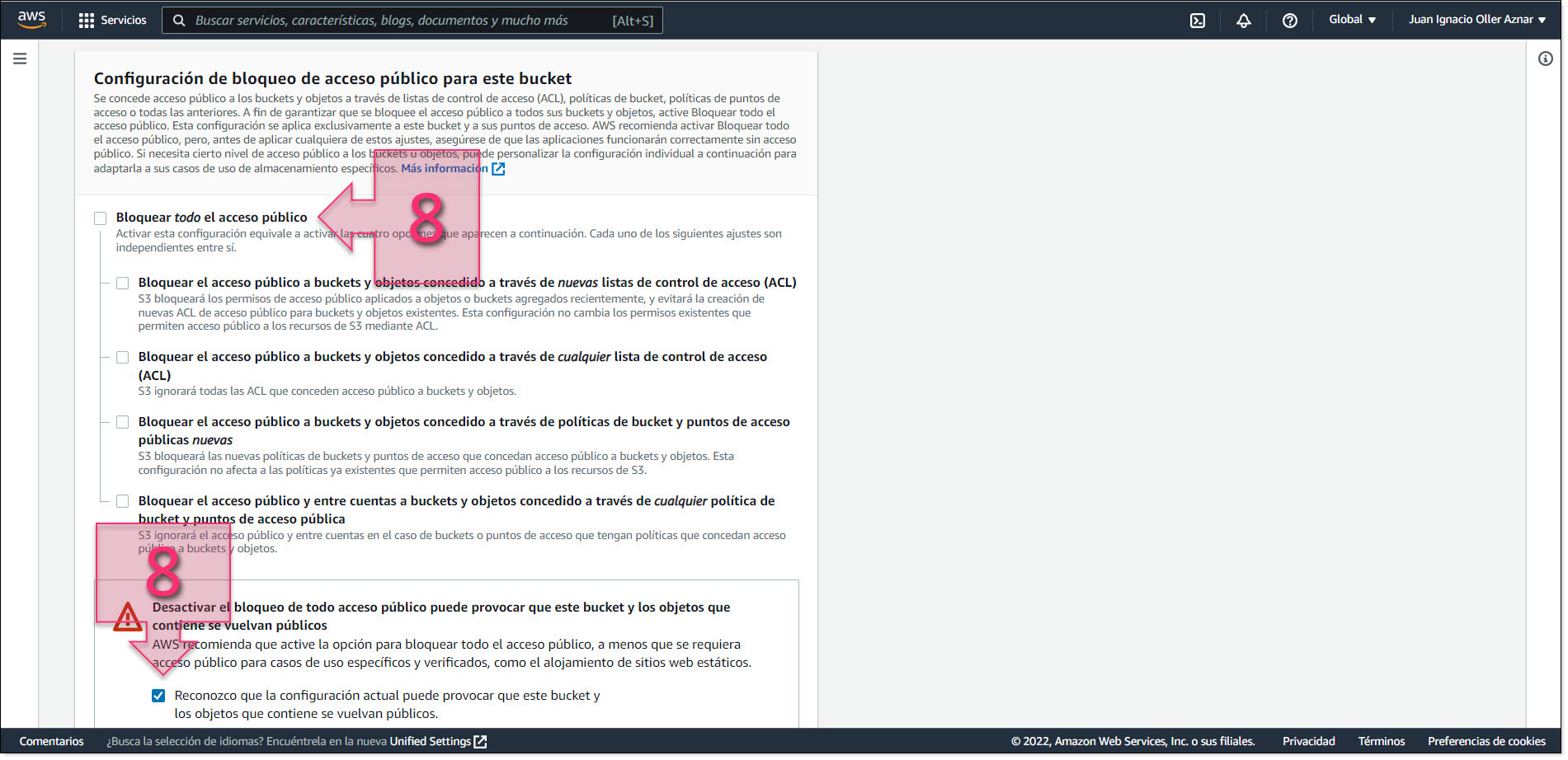

Under “Bucket settings for Block Public Access”, untick the box named “Block all public access” and accept the warning message that appears below (8).

Part 2 – Uncheck the box marked “Block all public access”

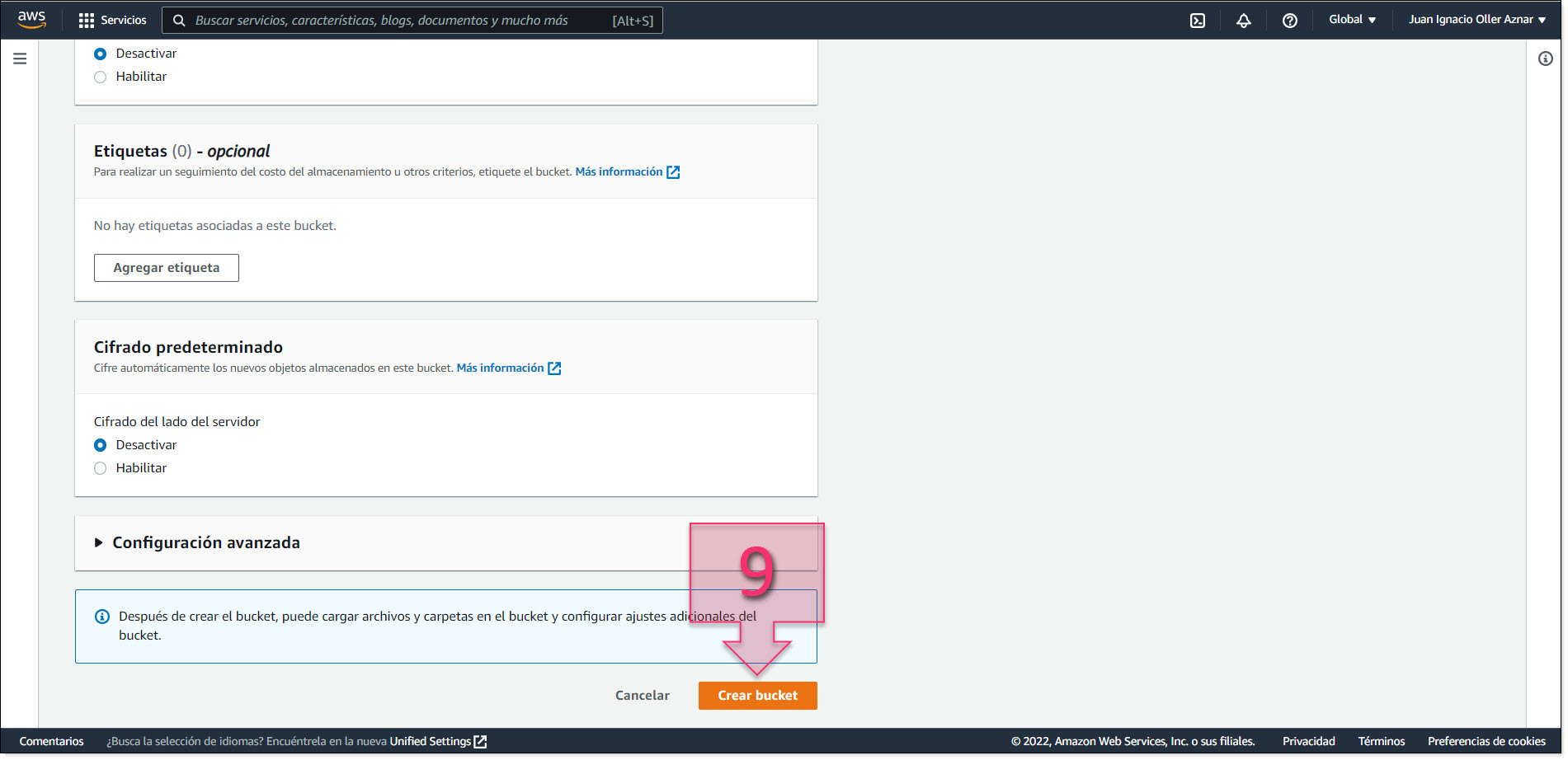

The rest of the settings can be left as they are by default, so all you have to do now is click on “Create Bucket” (9).

Part 2 – Leave the remaining parameters with their default settings and create the bucket

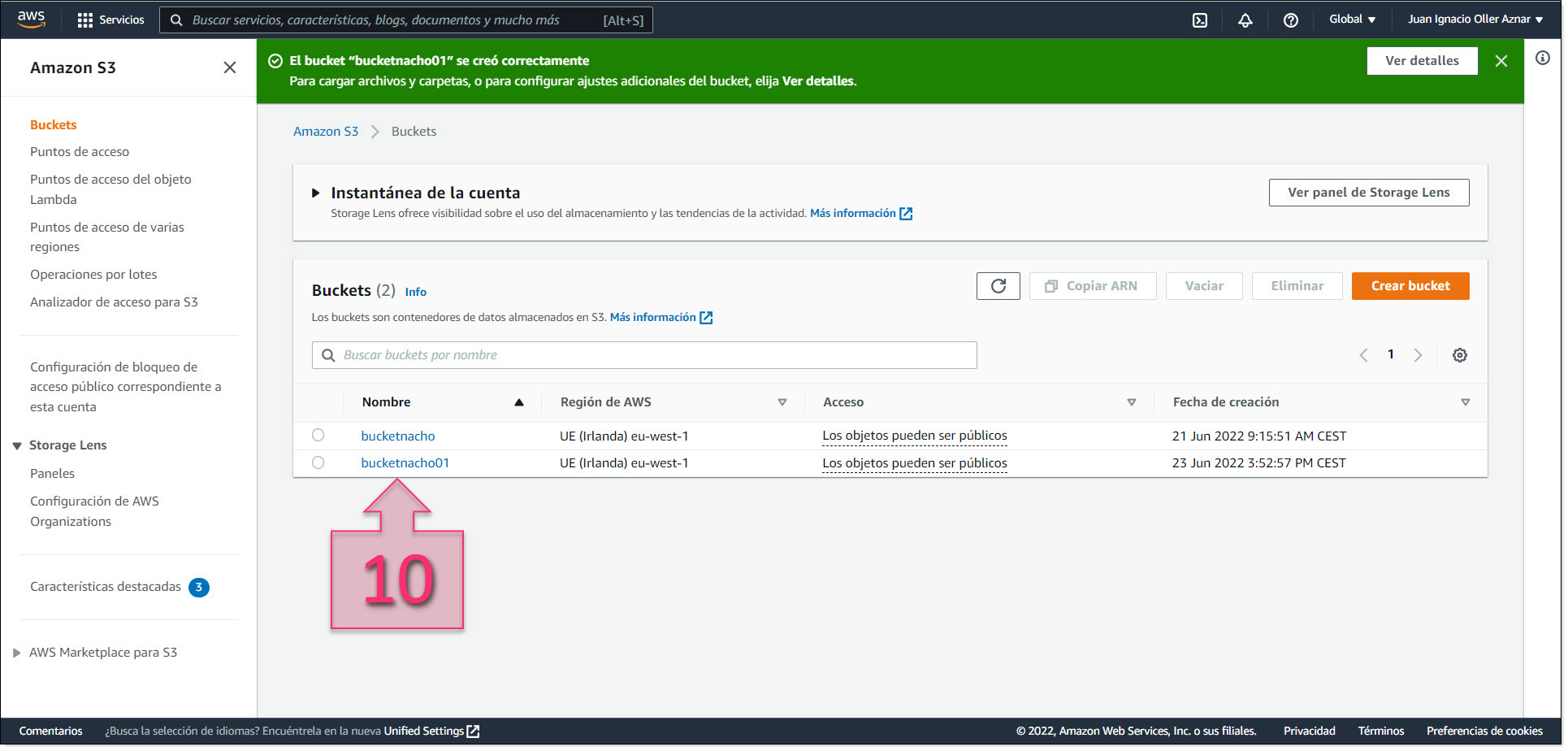

At this point, you will have to wait a moment while your new S3 bucket is deployed. Once ready, you will need to click on the name of the bucket (10) to make some final changes to the settings.

Part 2 – Click on the bucket name in the list

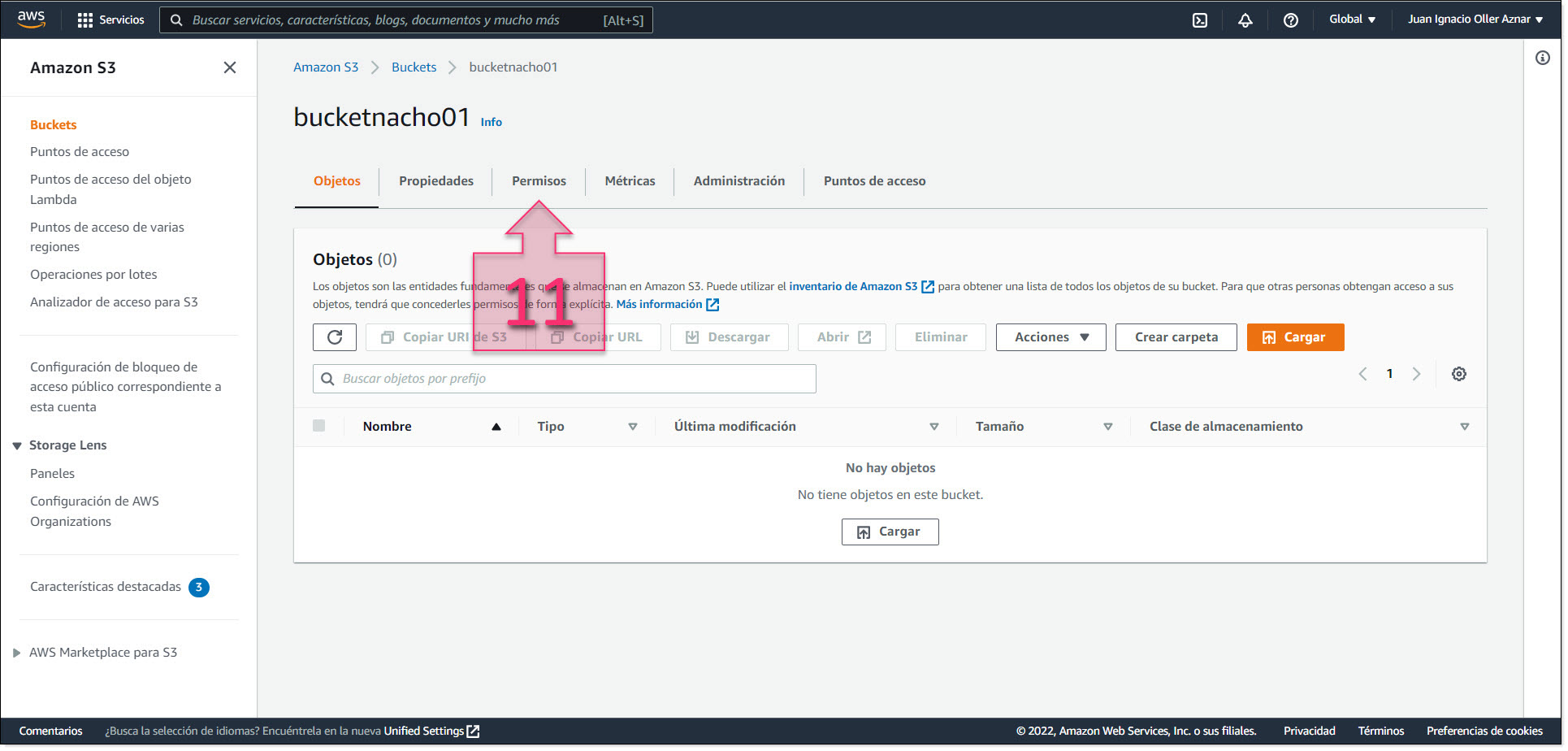

Next, you will need to configure the access permissions. Click on the “Permissions” tab (11).

Part 2 – Click on the “Permissions” tab

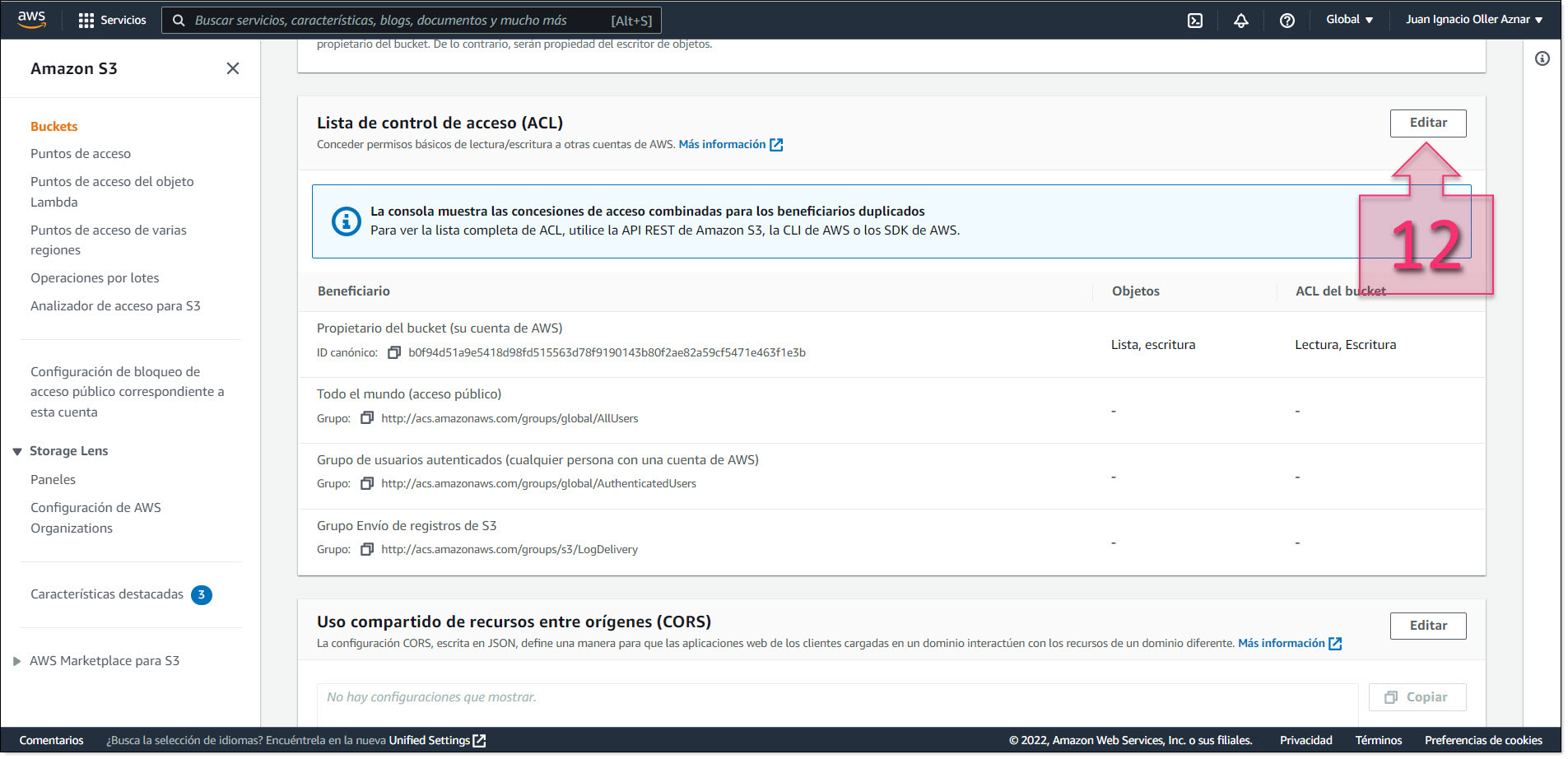

Then, scroll down to the “Access Control List” (ACL) and click on “Edit” (12).

Part 2 – Editing the Access Control List

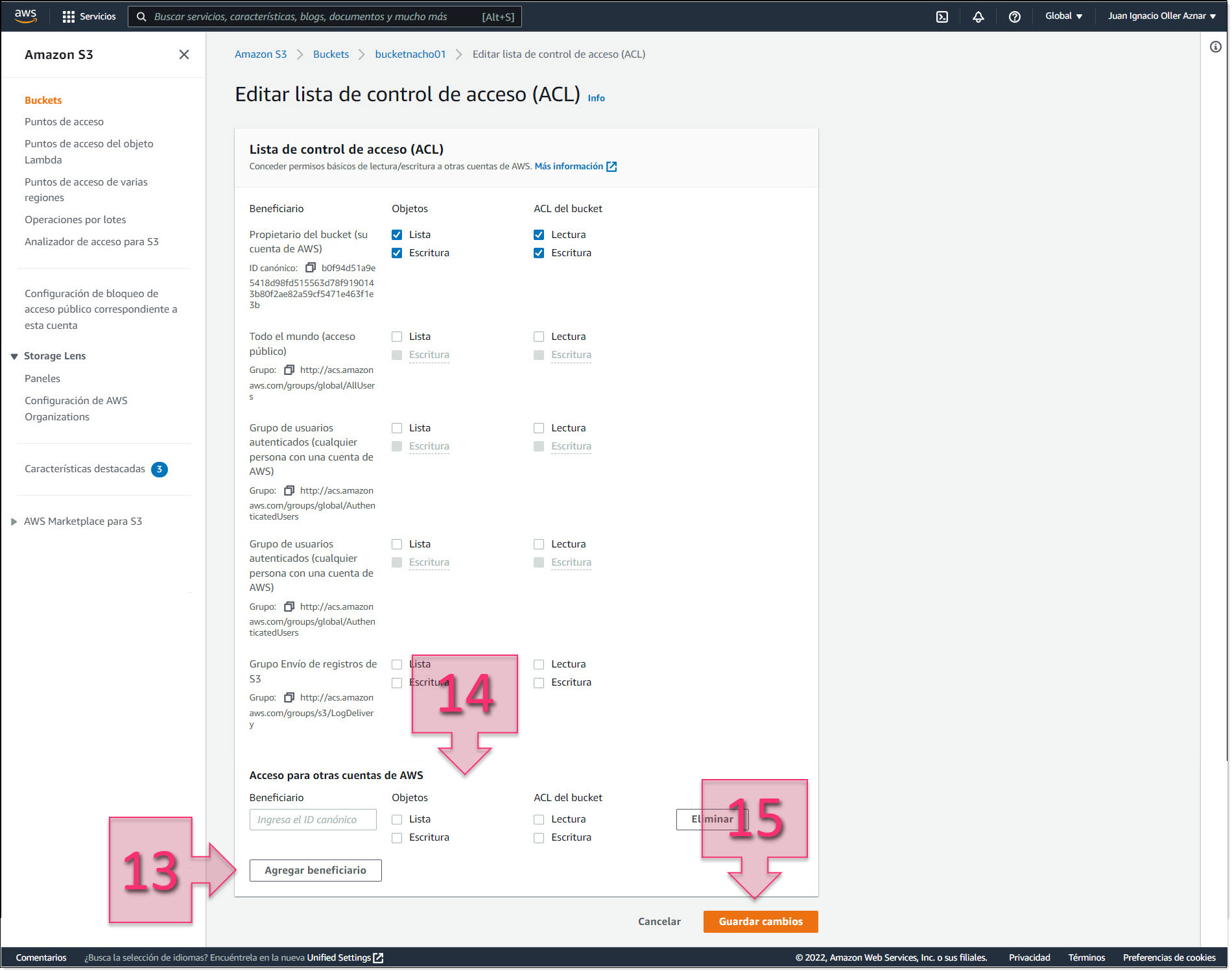

In the ACL, scroll down and click on “Add Grantee” (13). In the field that appears, enter the canonical ID “c4d8eabf8db69dbe46bfe0e517100c554f01200b104d59cd408e777ba442a322”, which works for Spain and Portugal, and tick the Read and Write checkboxes (14).

Once added, click on “Save Changes” (15).

Part 2 – Edit the ACL to add new access
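Once you have saved the changes, you can confirm the new grant from the command line with “aws s3api get-bucket-acl --bucket <BUCKET-NAME>”. This command prints the bucket's ACL as JSON with a “Grants” list. The Python sketch below is our own helper that returns the permissions granted to the canonical ID entered above. The S3 API reports permissions as WRITE, READ, READ_ACP and so on rather than using the console's checkbox labels. AWS's VM export documentation refers to WRITE and READ_ACP, but confirm this against the current AWS documentation.

```python
import json
from typing import Set

# Canonical ID entered as a grantee in the ACL step above
EXPORT_GRANTEE_ID = "c4d8eabf8db69dbe46bfe0e517100c554f01200b104d59cd408e777ba442a322"


def permissions_for(acl_json: str, grantee_id: str = EXPORT_GRANTEE_ID) -> Set[str]:
    """Return the permissions (e.g. "WRITE", "READ_ACP") granted to grantee_id
    in the JSON printed by "aws s3api get-bucket-acl"."""
    acl = json.loads(acl_json)
    return {
        grant["Permission"]
        for grant in acl.get("Grants", [])
        if grant.get("Grantee", {}).get("ID") == grantee_id
    }
```

An empty set means that the grantee was not saved, so it is worth repeating steps (13) to (15) before continuing.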

The S3 bucket is now ready and we can move on to the next step.

Part 3 – Exporting the VM from AWS

The first thing you need to do for this step is to locate the ID of the instance (VM) you wish to migrate.

To do this, go to “EC2” (Elastic Compute Cloud): type “EC2” in the search bar (16) and click on “EC2: Virtual Servers in the Cloud” (17).

Part 3 – Search for EC2 under Services

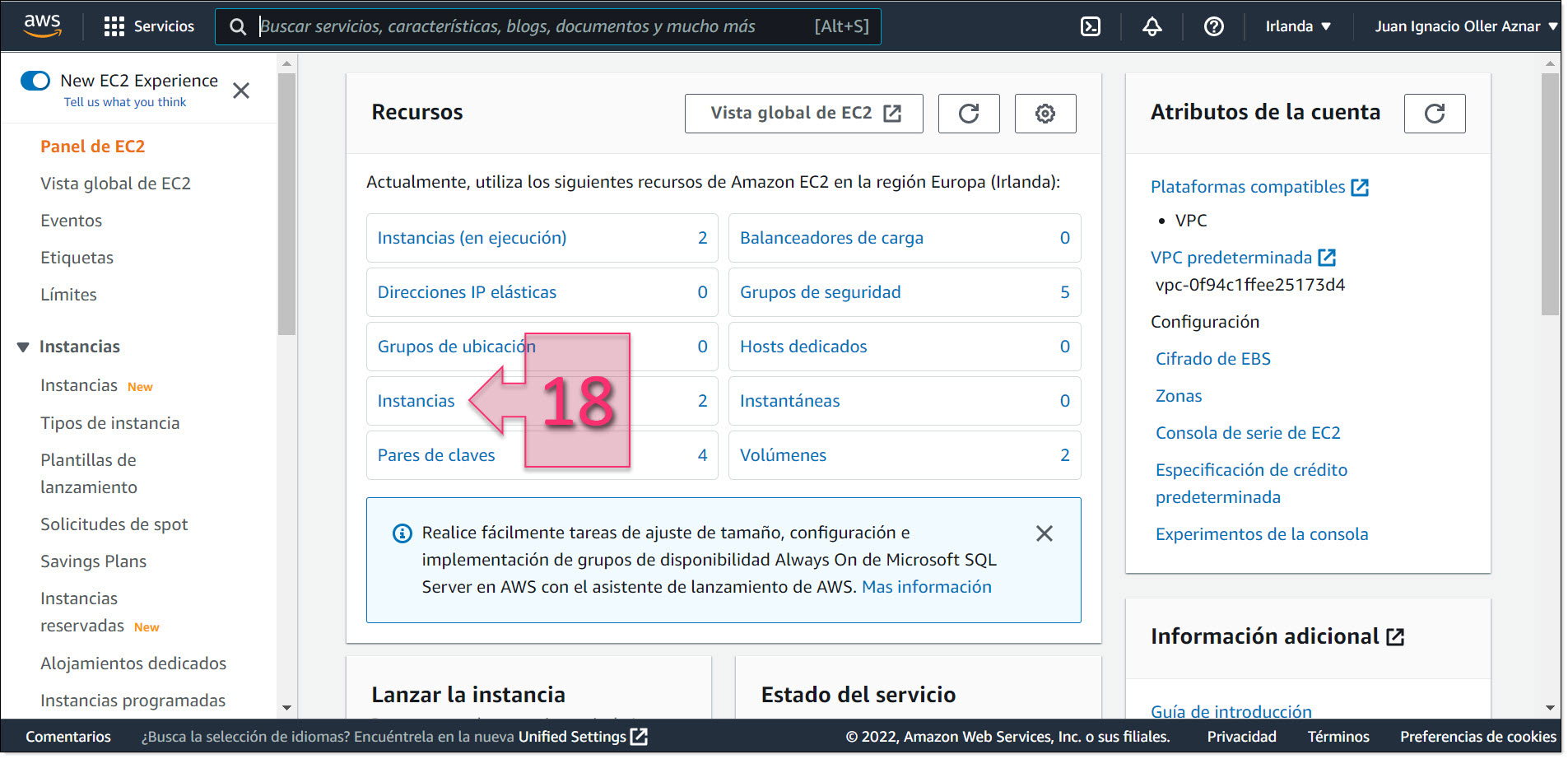

On the left-hand side of the EC2 page, click on “Instances” (18) to see all instances.

Part 3 – The Instances page on AWS

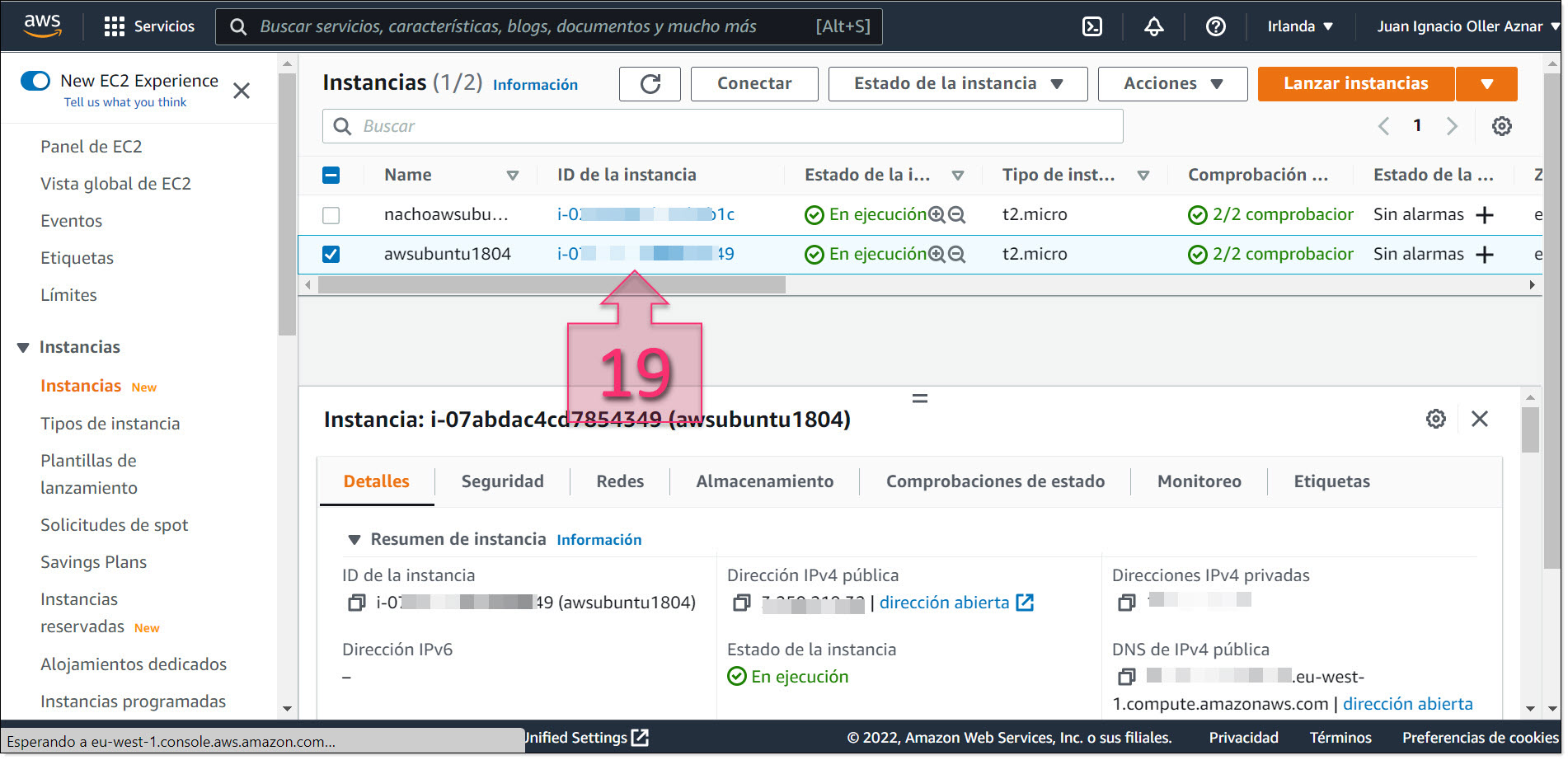

Find the instance that you want to migrate from this list and copy the Instance ID (19). Make a note of this ID for use later on.

Part 3 – Copy the Instance ID for the instance you wish to migrate
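Instance IDs have a fixed format, so a quick check can catch copy-and-paste mistakes before you run the export. This Python sketch assumes the usual format: “i-” followed by either 8 lowercase hexadecimal characters (older instances) or 17. Placeholder values such as the “i-whahahaha” used in the example command later in this tutorial will fail the check, as intended.

```python
import re

# "i-" followed by 8 (older) or 17 (current) lowercase hexadecimal characters
_INSTANCE_ID_RE = re.compile(r"i-(?:[0-9a-f]{8}|[0-9a-f]{17})")


def is_instance_id(value: str) -> bool:
    """True if value (ignoring surrounding whitespace) looks like an EC2 instance ID."""
    return _INSTANCE_ID_RE.fullmatch(value.strip()) is not None
```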

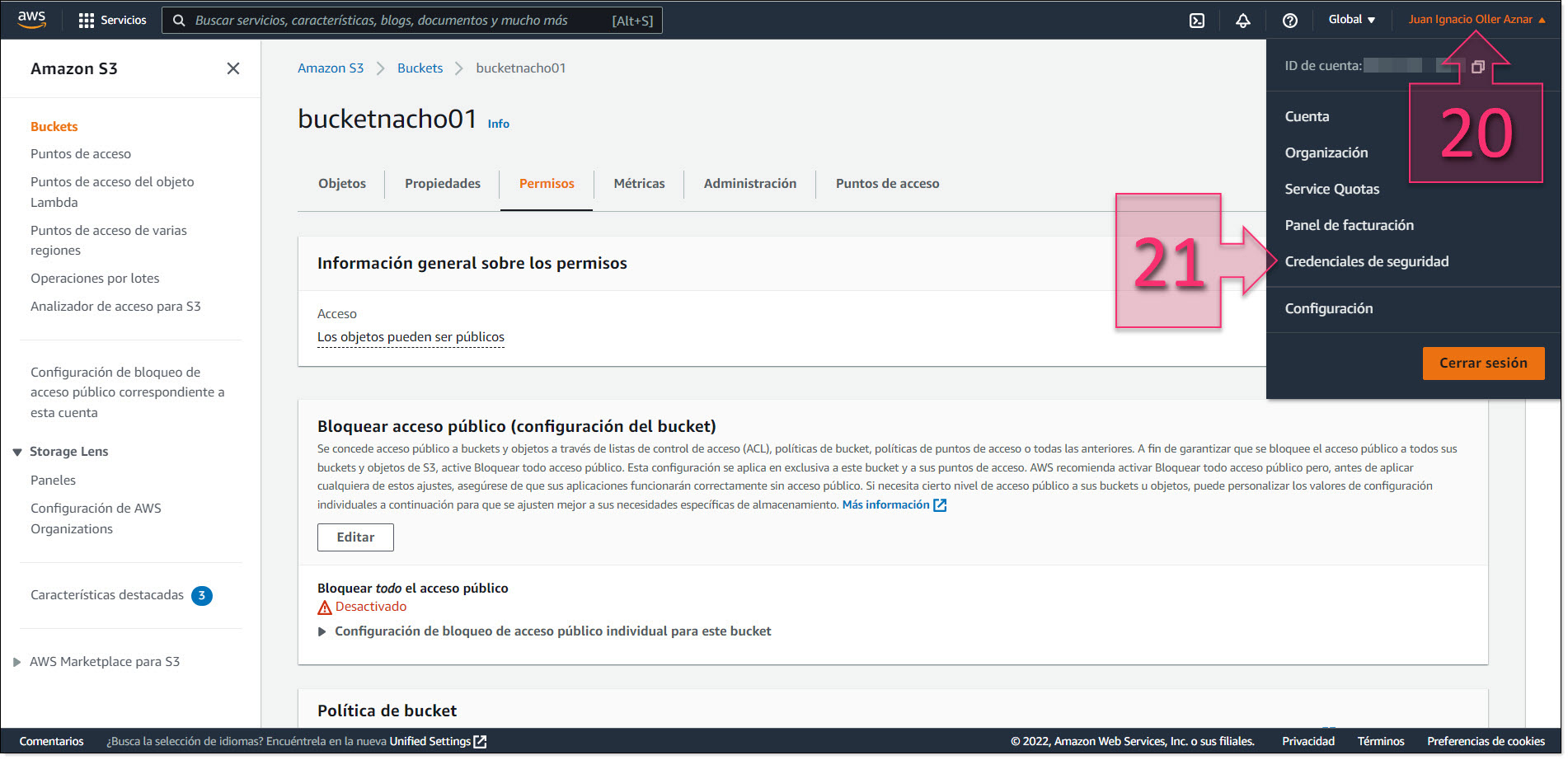

Part 3 – Open the Security Credentials section on AWS

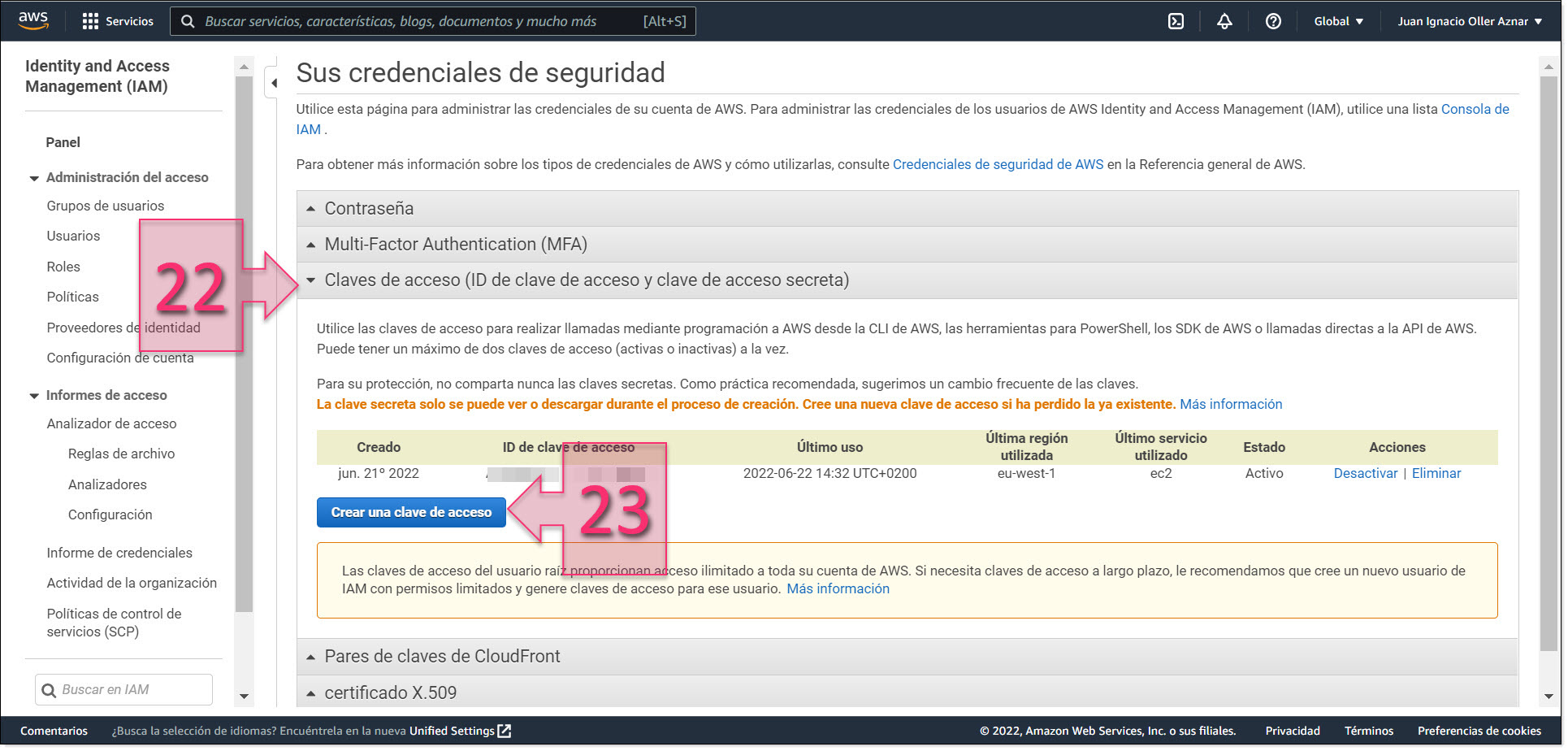

Once you are in the Security Credentials section, expand the “Access Keys (access key ID and secret access key)” section (22).

In this section, you will need to create new keys for the connection. Click on “Create new access key” (23).

Part 3 – Create new access keys

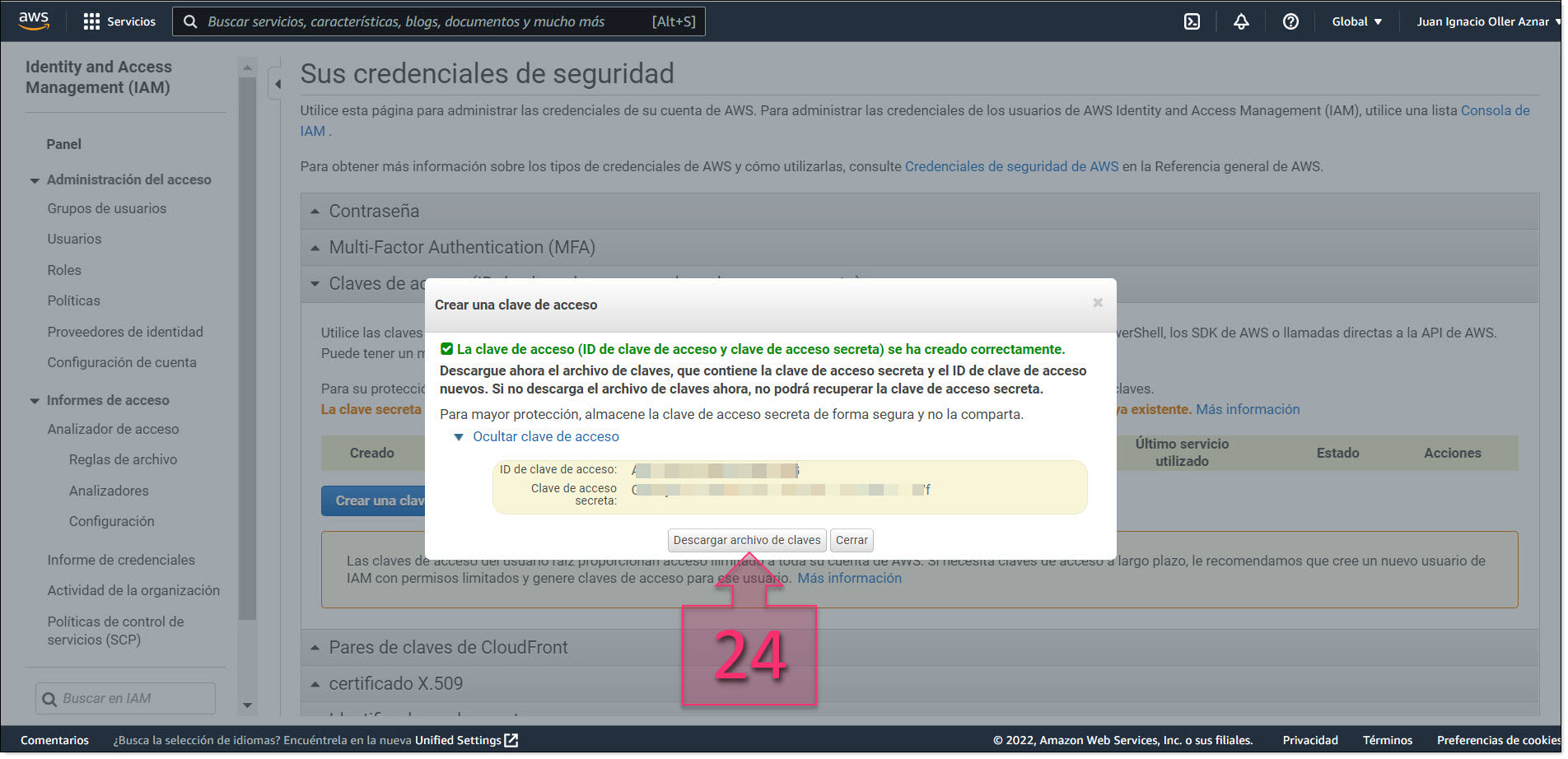

A window will appear showing the “Access key ID” and “Secret access key”.

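At this point, you need to do two things. First, copy these keys, as you will need them later in PowerShell. Second, click on “Download key file” (24) and save it on your device. AWS only shows the secret access key when the key is created, so if you lose it, you will need to create a new key pair.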

Part 3 – Save your newly generated keys

Now that you have the connection details, you are ready to run PowerShell (which should be run as an administrator).

First, open a text editor, such as Notepad++ or gVim and create a file named “file.json” with the following text:

{

"DiskImageFormat": "VHD",

"S3Bucket": "bucketnacho01",

"S3Prefix": "vms/"

}

Where:

- “DiskImageFormat” is the format in which the server will be exported. In this case, it is VHD, the format that will be imported into Jotelulu.

- “S3Bucket” is the name of the bucket that you created in Part 2.

- “S3Prefix” is the folder (key prefix) inside the bucket where the exported image will be stored. Keep the trailing slash so that the image is saved inside that folder.
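If you prefer not to write the file by hand, a short script can generate it. Some editors and word processors replace straight quotes with typographic “smart” quotes, which makes the file invalid JSON, and generating the file avoids that. This Python sketch writes the same three fields. The bucket name “bucketnacho01” and prefix “vms/” are only the example values used above.

```python
import json
from pathlib import Path


def write_export_config(path: str, bucket: str, prefix: str = "vms/", disk_format: str = "VHD") -> Path:
    """Write the export settings file used by create-instance-export-task."""
    config = {
        "DiskImageFormat": disk_format,  # VHD for the Jotelulu import
        "S3Bucket": bucket,              # bucket created in Part 2
        "S3Prefix": prefix,              # "folder" inside the bucket
    }
    target = Path(path)
    # json.dump always writes plain ASCII double quotes, never typographic quotes
    with target.open("w", encoding="utf-8") as handle:
        json.dump(config, handle, indent=2)
    return target
```

For example, write_export_config("file.json", "bucketnacho01") creates “file.json” in the current folder and returns its path.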

Once you have created and saved this file on your device, make a note of where it is saved for use in later commands.

NOTE: On GNU/Linux systems, make sure that the user who runs AWS CLI has read permission on this file.

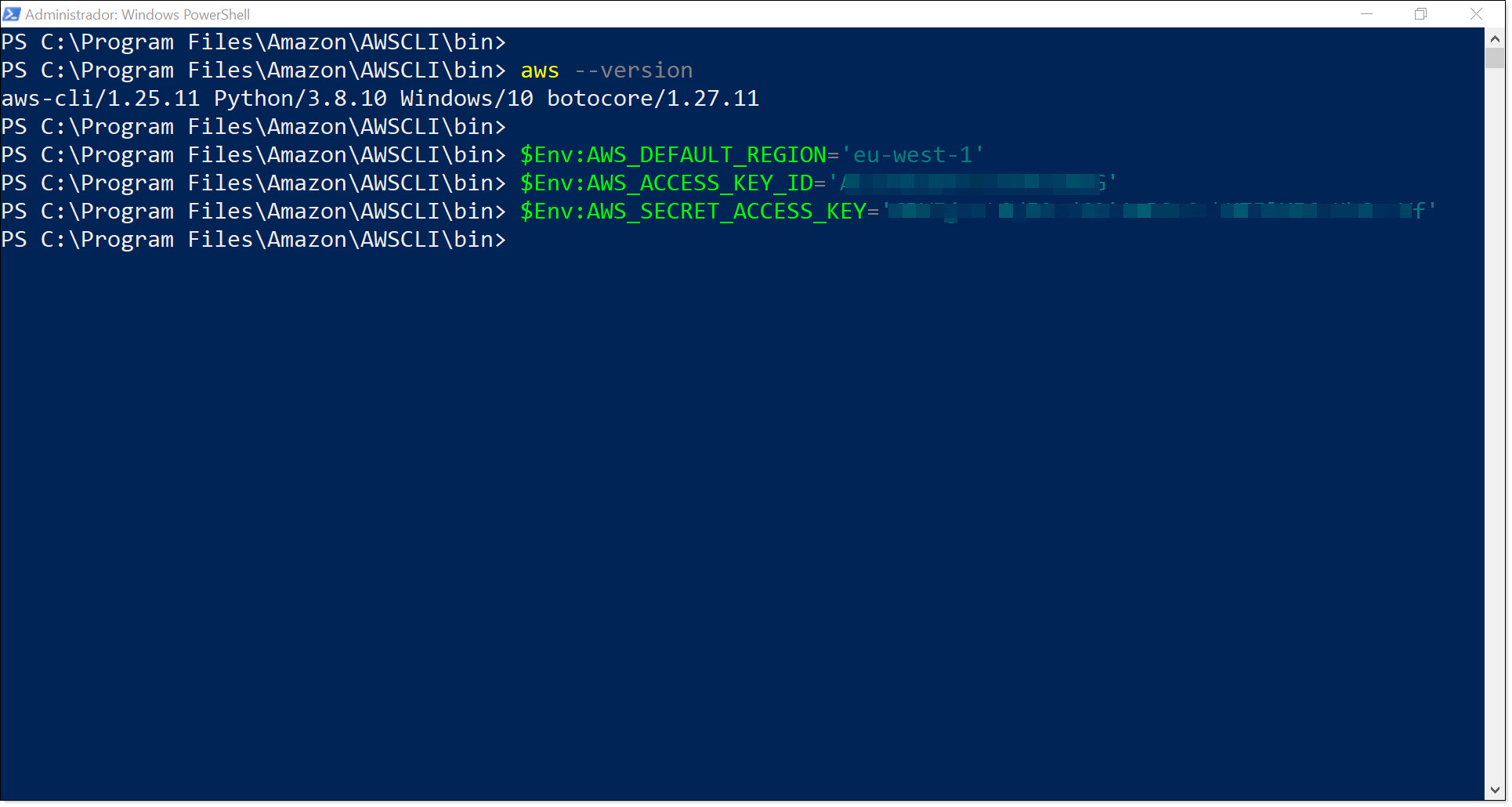

Before AWS CLI can run commands against your AWS account from PowerShell, it needs your credentials. To provide them, load the following environment variables:

- $Env:AWS_DEFAULT_REGION='REGION_OF_THE_VM_AND_S3'

- $Env:AWS_ACCESS_KEY_ID='ACCESS_KEY_ID'

- $Env:AWS_SECRET_ACCESS_KEY='SECRET_ACCESS_KEY'

Use the keys that you made a note of earlier to load these variables.

Here is an example of what these commands might look like. Type them in the PowerShell window, replacing the values with your own region and keys:

$Env:AWS_DEFAULT_REGION='eu-west-1'

$Env:AWS_ACCESS_KEY_ID='BEGHC3T2OQ53AJHKIAZA'

$Env:AWS_SECRET_ACCESS_KEY='DGIjWLv0w/adEi1960e8ehUVfwRSTKhGrFlXE6rs'

To check that these environment variables have been loaded correctly, run the command “echo” followed by the name of the variable. For example:

echo $Env:AWS_DEFAULT_REGION

echo $Env:AWS_ACCESS_KEY_ID

echo $Env:AWS_SECRET_ACCESS_KEY

Part 3 – Load the environment variables for the AWS connection

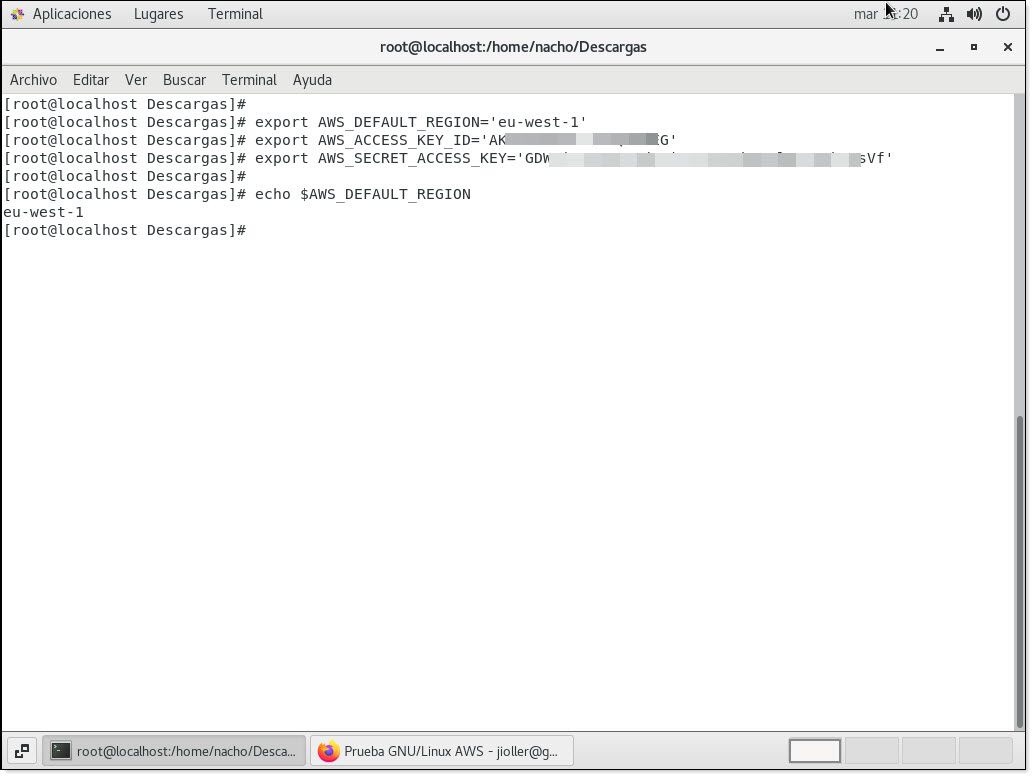

For GNU/Linux devices, you need to do this using the “export” command as follows:

export AWS_DEFAULT_REGION='eu-west-1'

export AWS_ACCESS_KEY_ID='BEGHC3T2OQ53AJHKIAZA'

export AWS_SECRET_ACCESS_KEY='DGIjWLv0w/adEi1960e8ehUVfwRSTKhGrFlXE6rs'

You can check that the variables have been loaded correctly using the “echo” command with a $ symbol in front of the variable. For example:

echo $AWS_DEFAULT_REGION

And in this case, it will return the value “eu-west-1”.

Part 3 – Loading environment variables for the AWS connection using CentOS 7
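Running “echo” on the secret access key prints it in full, which is best avoided on shared screens or in screenshots. As an alternative, the following Python sketch, which is our own helper rather than an AWS tool, checks that the three variables are set and shows only the last few characters of the secret. It works whether you loaded the variables with PowerShell or with “export”, as long as you run it from the same session.

```python
import os
from typing import List, Mapping

REQUIRED_VARS = ("AWS_DEFAULT_REGION", "AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY")


def missing_aws_vars(env: Mapping[str, str] = os.environ) -> List[str]:
    """Return the names of required variables that are unset or empty."""
    return [name for name in REQUIRED_VARS if not env.get(name, "").strip()]


def masked(value: str, visible: int = 4) -> str:
    """Hide all but the last `visible` characters of a secret."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


if __name__ == "__main__":
    print("Missing variables:", missing_aws_vars() or "none")
    print("Region:", os.environ.get("AWS_DEFAULT_REGION", ""))
    print("Secret key:", masked(os.environ.get("AWS_SECRET_ACCESS_KEY", "")))
```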

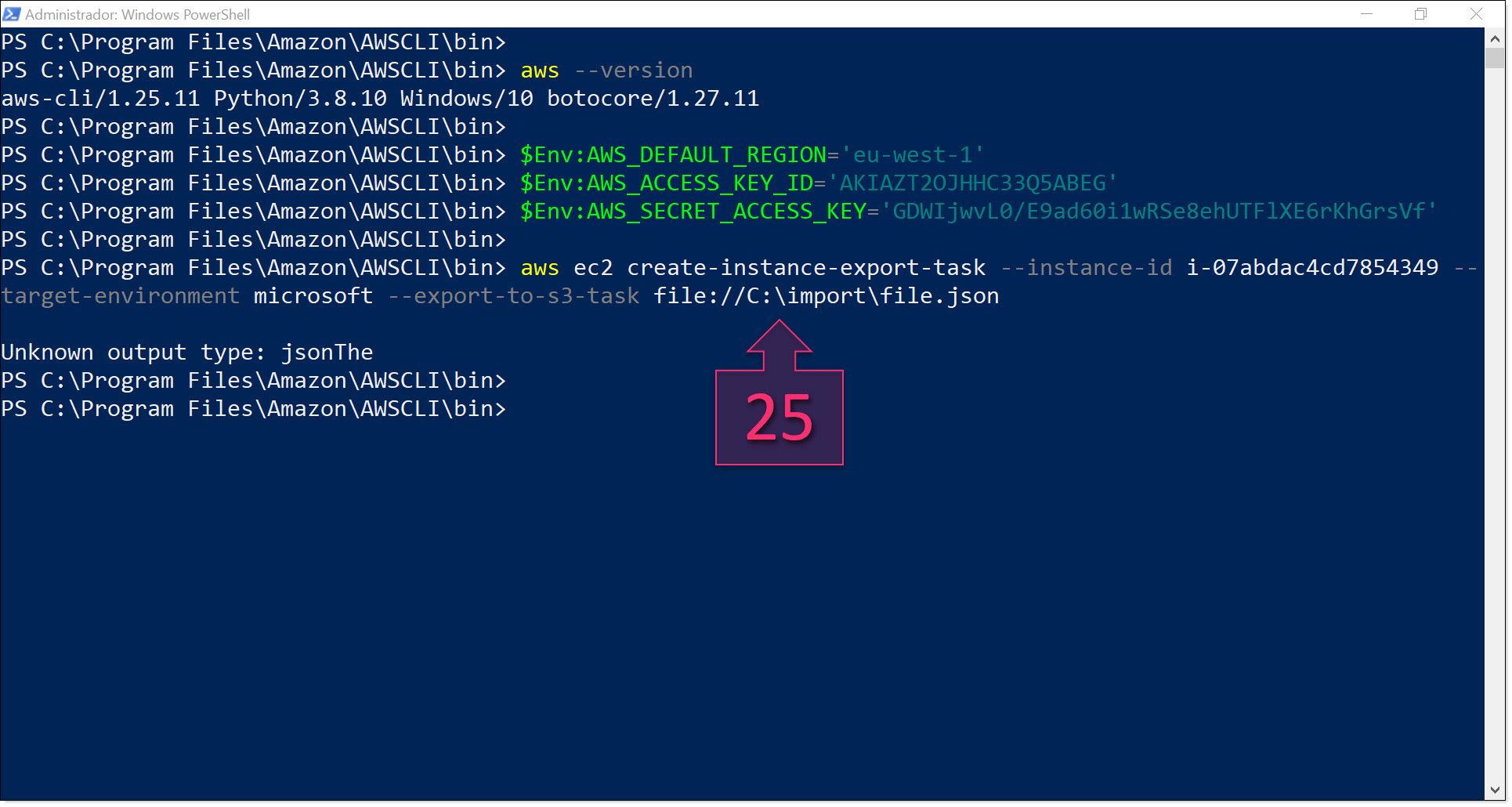

Next, run the command “aws ec2 create-instance-export-task” (25) to begin exporting the VM.

aws ec2 create-instance-export-task --instance-id <INSTANCE-ID> --target-environment microsoft --export-to-s3-task file://<FILE PATH>

Where:

- <INSTANCE-ID> is the instance ID that you noted down previously.

- <FILE PATH> is the path where the file “file.json” is located.

For example:

aws ec2 create-instance-export-task --instance-id i-whahahaha --target-environment microsoft --export-to-s3-task file://C:\import\file.json

Part 3 – Run the command to export your instance to your S3
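You can also build the same command from a script, which lets you capture the ID of the export task that AWS returns. The Python sketch below is an illustration under our own assumptions. It adds “--output json” so that the result can be parsed, resolves “file.json” to an absolute path for the “file://” argument, and reads the “ExportTaskId” field from the JSON that “create-instance-export-task” prints.

```python
import json
from pathlib import Path
from typing import List


def export_task_command(instance_id: str, config_path: str, target: str = "microsoft") -> List[str]:
    """Build the "aws ec2 create-instance-export-task" command used above."""
    # "file://" tells AWS CLI to read the parameter value from a local file
    config_uri = "file://" + str(Path(config_path).resolve())
    return [
        "aws", "ec2", "create-instance-export-task",
        "--instance-id", instance_id,
        "--target-environment", target,
        "--export-to-s3-task", config_uri,
        "--output", "json",
    ]


def export_task_id(cli_output: str) -> str:
    """Extract the export task ID from the command's JSON output."""
    return json.loads(cli_output)["ExportTask"]["ExportTaskId"]
```

For example, you could run the list with subprocess.run(..., capture_output=True, text=True, check=True) and pass result.stdout to export_task_id() to keep the ID for monitoring.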

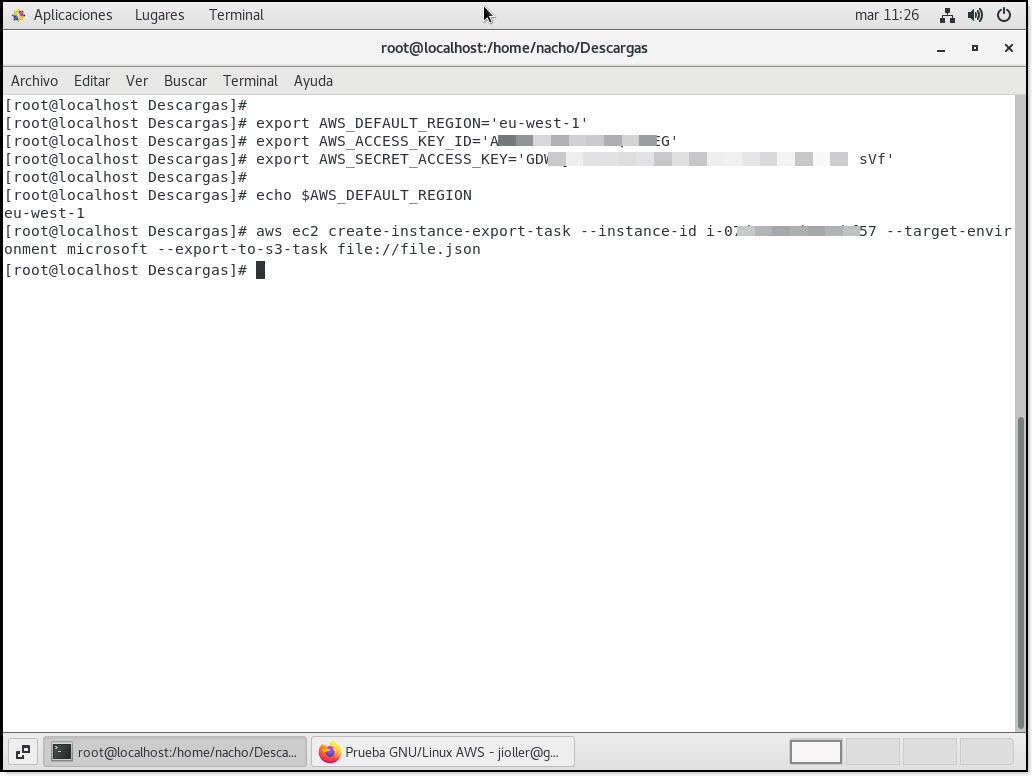

To do this on GNU/Linux, use the same syntax but change the path to “file.json” accordingly.

Part 3 – Launching the migration from CentOS 7

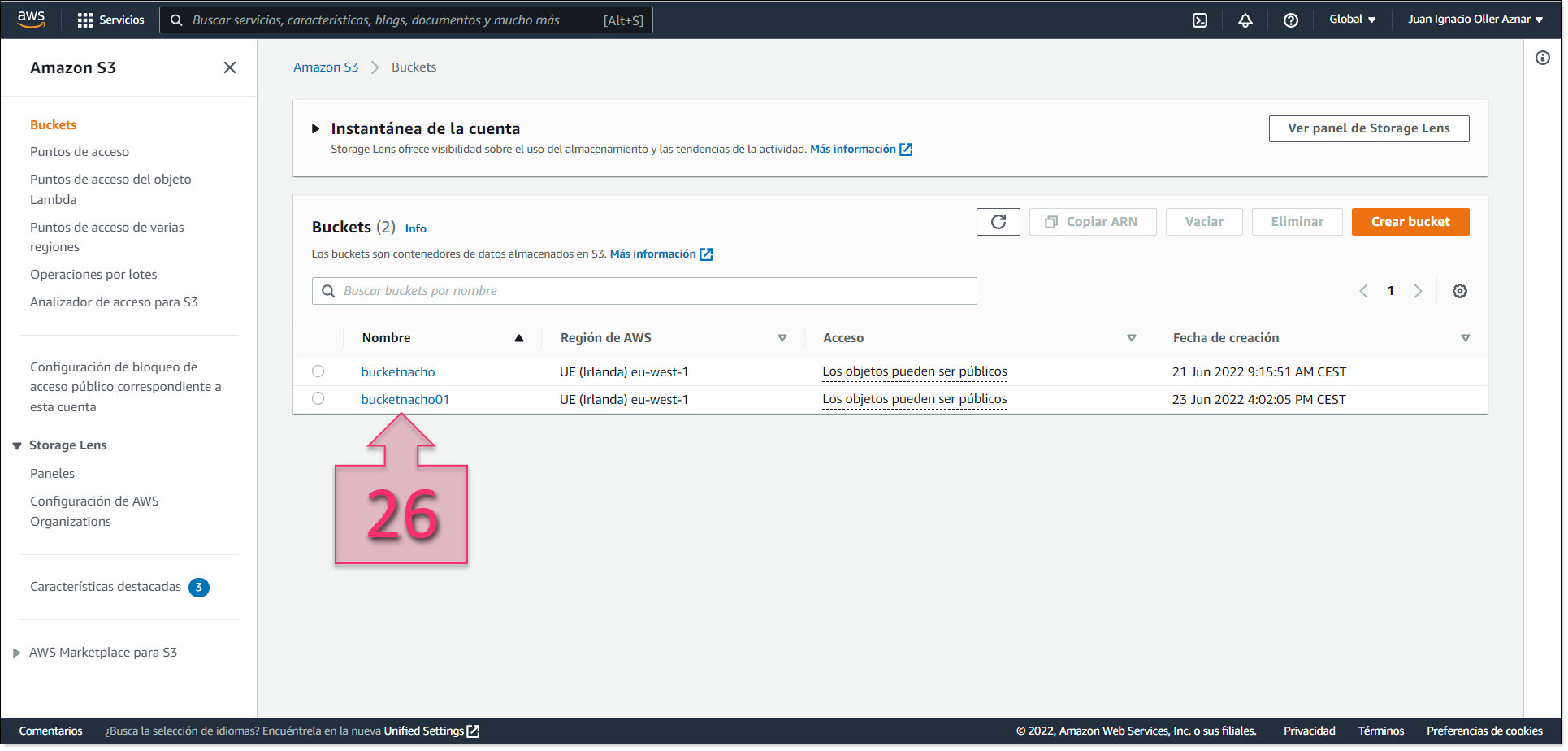

Next, you will need to check the S3 Bucket to see if the data has been copied correctly.

NOTE: The time required to complete this process can vary greatly depending on the size of the server that you want to export.
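Rather than refreshing the bucket, you can follow the export from the command line with “aws ec2 describe-export-tasks”. Its JSON output includes a “State” field for each task: active, cancelling, cancelled or completed. The Python sketch below polls that command until the task is no longer active or cancelling. The polling interval and maximum number of checks are arbitrary values chosen for illustration. The “run” parameter lets you try the logic without calling AWS.

```python
import json
import subprocess
import time
from typing import Callable, List


def describe_export_command(task_id: str) -> List[str]:
    return ["aws", "ec2", "describe-export-tasks", "--export-task-ids", task_id, "--output", "json"]


def run_aws(command: List[str]) -> str:
    """Run an AWS CLI command and return its standard output."""
    return subprocess.run(command, capture_output=True, text=True, check=True).stdout


def wait_for_export(
    task_id: str,
    run: Callable[[List[str]], str] = run_aws,
    interval_s: float = 60.0,
    max_checks: int = 240,
) -> str:
    """Poll the export task and return its final state ("completed" or "cancelled")."""
    for _ in range(max_checks):
        task = json.loads(run(describe_export_command(task_id)))["ExportTasks"][0]
        # States reported by EC2: active | cancelling | cancelled | completed
        if task["State"] not in ("active", "cancelling"):
            return task["State"]
        time.sleep(interval_s)
    raise TimeoutError(f"export task {task_id} was still running after {max_checks} checks")
```

A result of “completed” means that the VHD should now be in the bucket, under the prefix defined in “file.json”.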

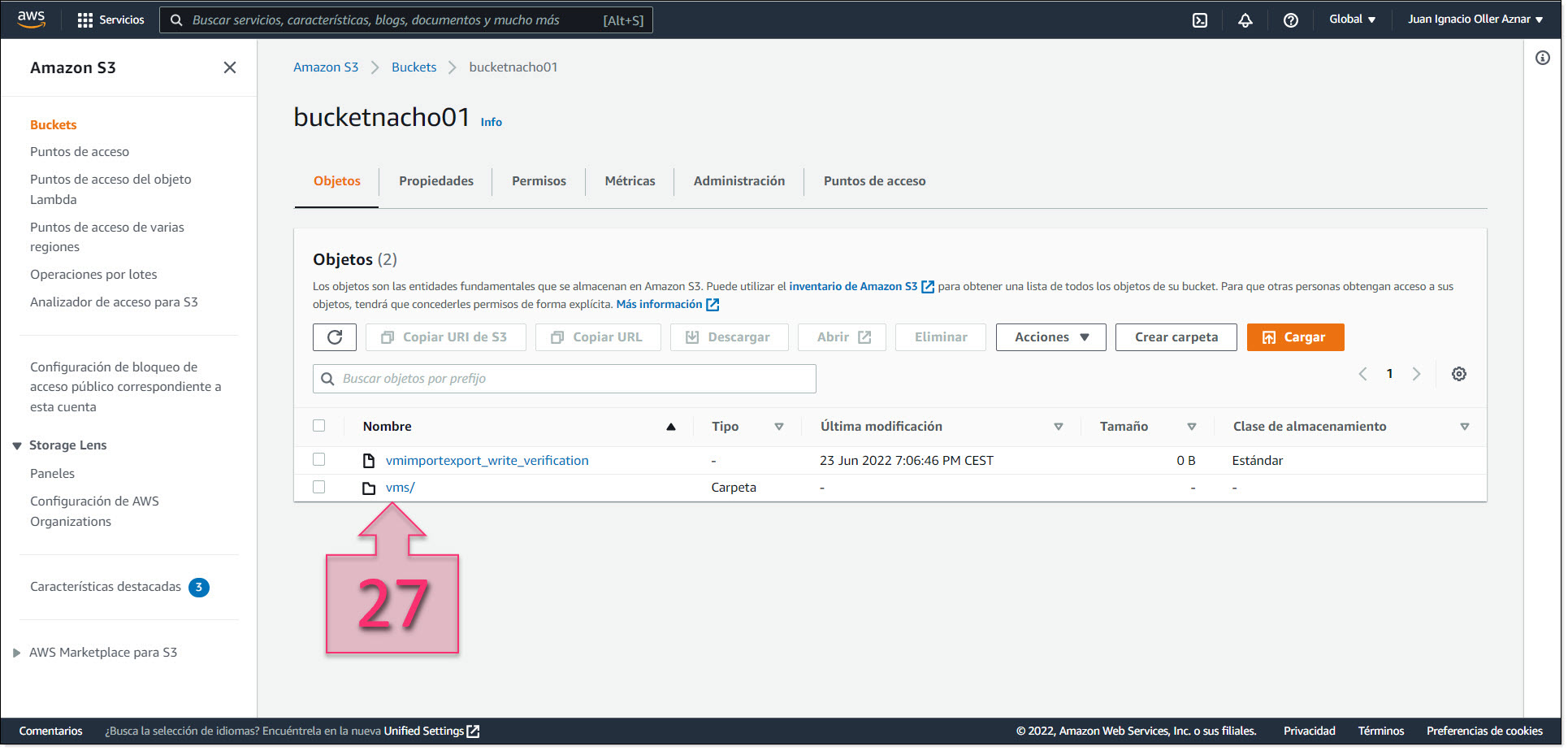

Once you have located the bucket where the VM has been exported to, click on the bucket name (26) to see its contents.

Part 3 – Access the bucket selected for the migration

Next, find the folder where the exported files have been saved (27). This is the prefix defined in “file.json”, which in this example is “vms/”.

Part 3 – Find the folder where the exported files have been saved

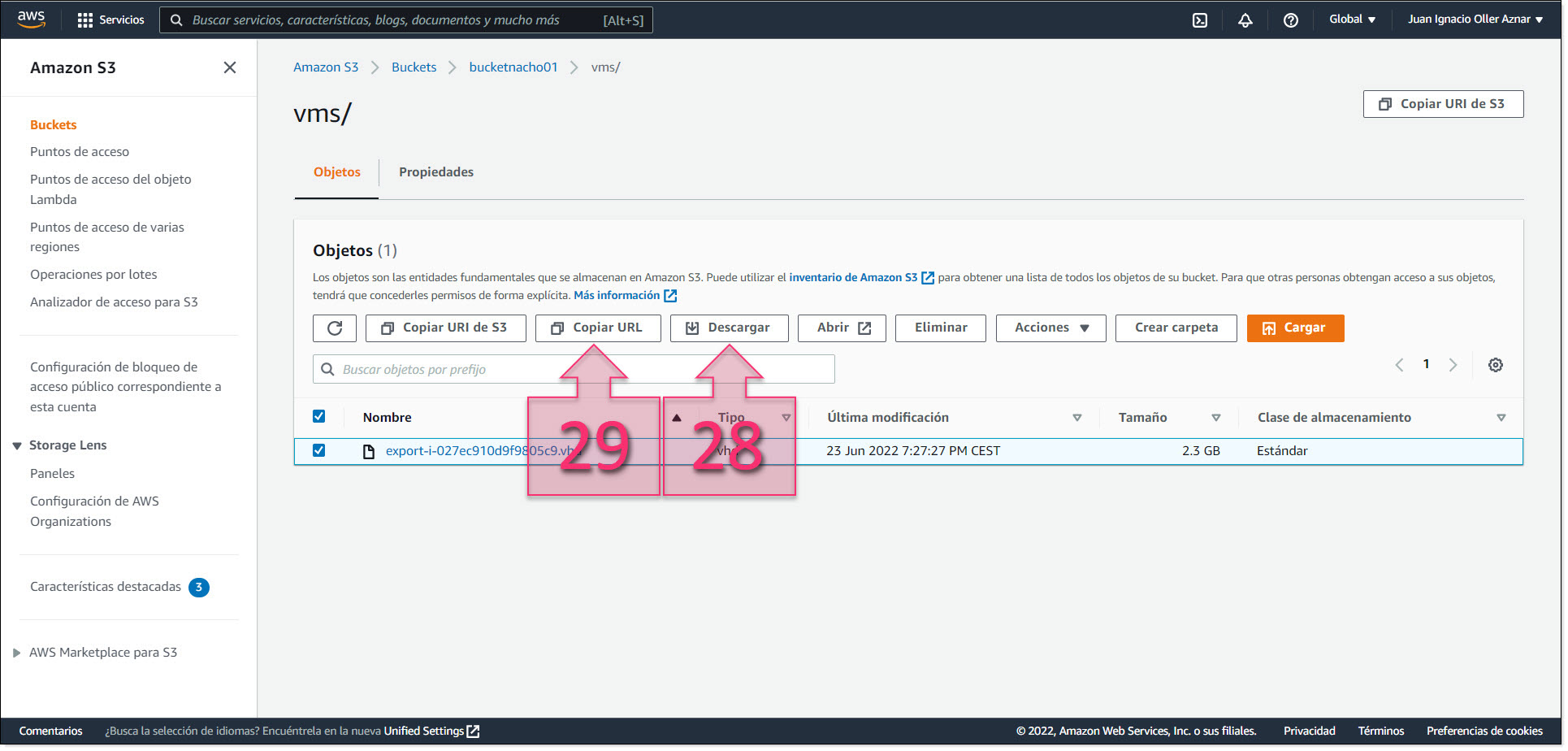

At this point, if everything has worked correctly, you will have two options: download the VHD directly (28) or copy the URL (29) to use in the next step to migrate it to Jotelulu.

Part 3 – Copy the download URL or download the VHD directly
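The URL you copy follows the usual virtual-hosted style of S3 object URLs. The Python sketch below rebuilds that URL, together with the file name that you will need in Part 4. It assumes that the object key is the “S3Prefix” from “file.json”, followed by the export task ID and the lower-case format extension (for example, “vms/export-i-1234567890abcdef0.vhd”). Check the actual key in the bucket before relying on it. Whether Jotelulu can download from the URL also depends on the object's permissions, which this sketch does not change.

```python
import posixpath
from urllib.parse import quote


def exported_object_key(prefix: str, task_id: str, disk_format: str = "VHD") -> str:
    """Assumed key of the exported disk: <S3Prefix><ExportTaskId>.<format>."""
    return f"{prefix}{task_id}.{disk_format.lower()}"


def object_url(bucket: str, region: str, key: str) -> str:
    """Virtual-hosted-style S3 object URL (percent-encodes the key, keeps "/")."""
    return f"https://{bucket}.s3.{region}.amazonaws.com/{quote(key)}"


def disk_file_name(key: str) -> str:
    """File name (with extension) to enter in the Jotelulu migration wizard."""
    return posixpath.basename(key)
```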

You have now finished the part of the process that involves AWS.

Part 4 – Uploading your VM to Jotelulu

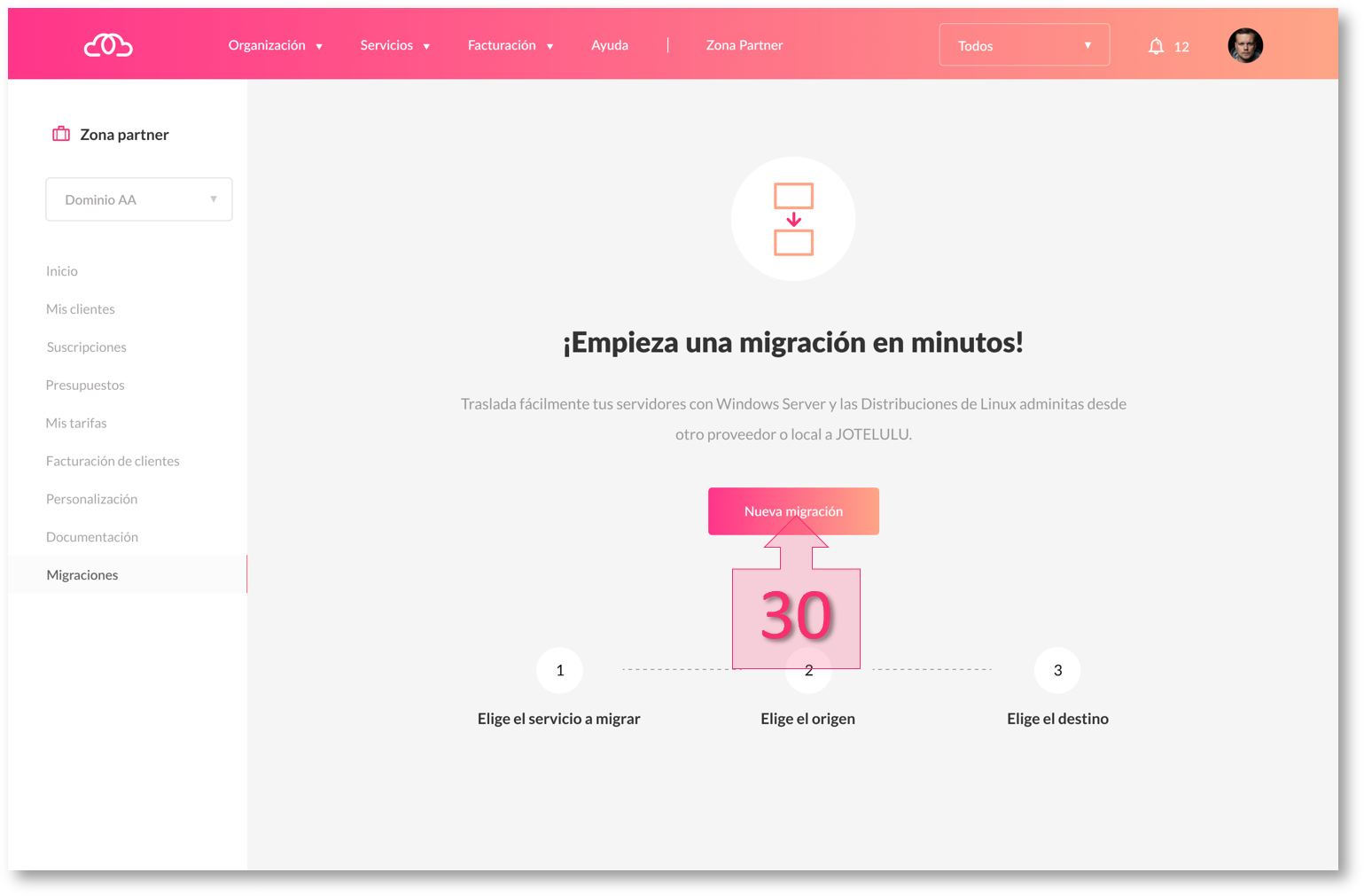

To begin migrating your VM to Jotelulu, first load the Jotelulu platform and access the Partner Area. From here, navigate to the Migration section and click on “New Migration” (30).

NOTE: This page may look different if you have already performed previous migrations, in which case, these will be listed here.

Part 4 – Launch a new migration from the Jotelulu platform

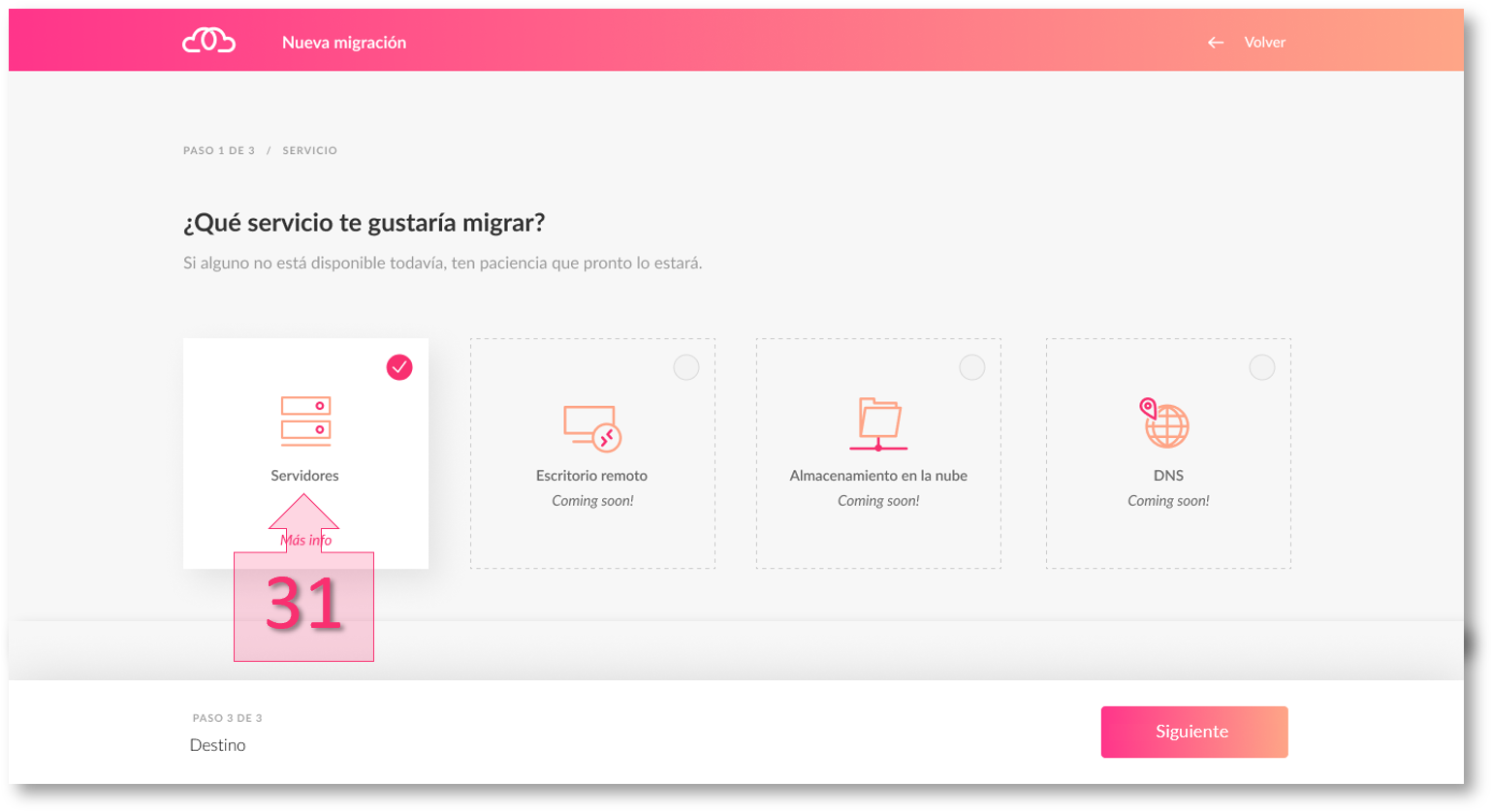

You will now be taken through a guided process to configure the system to migrate your server to Jotelulu.

First, you will be asked to choose the type of service to migrate, which in this case, will be Servers. Click on “Servers” from the options shown (31).

Part 4 – Select Servers from the menu

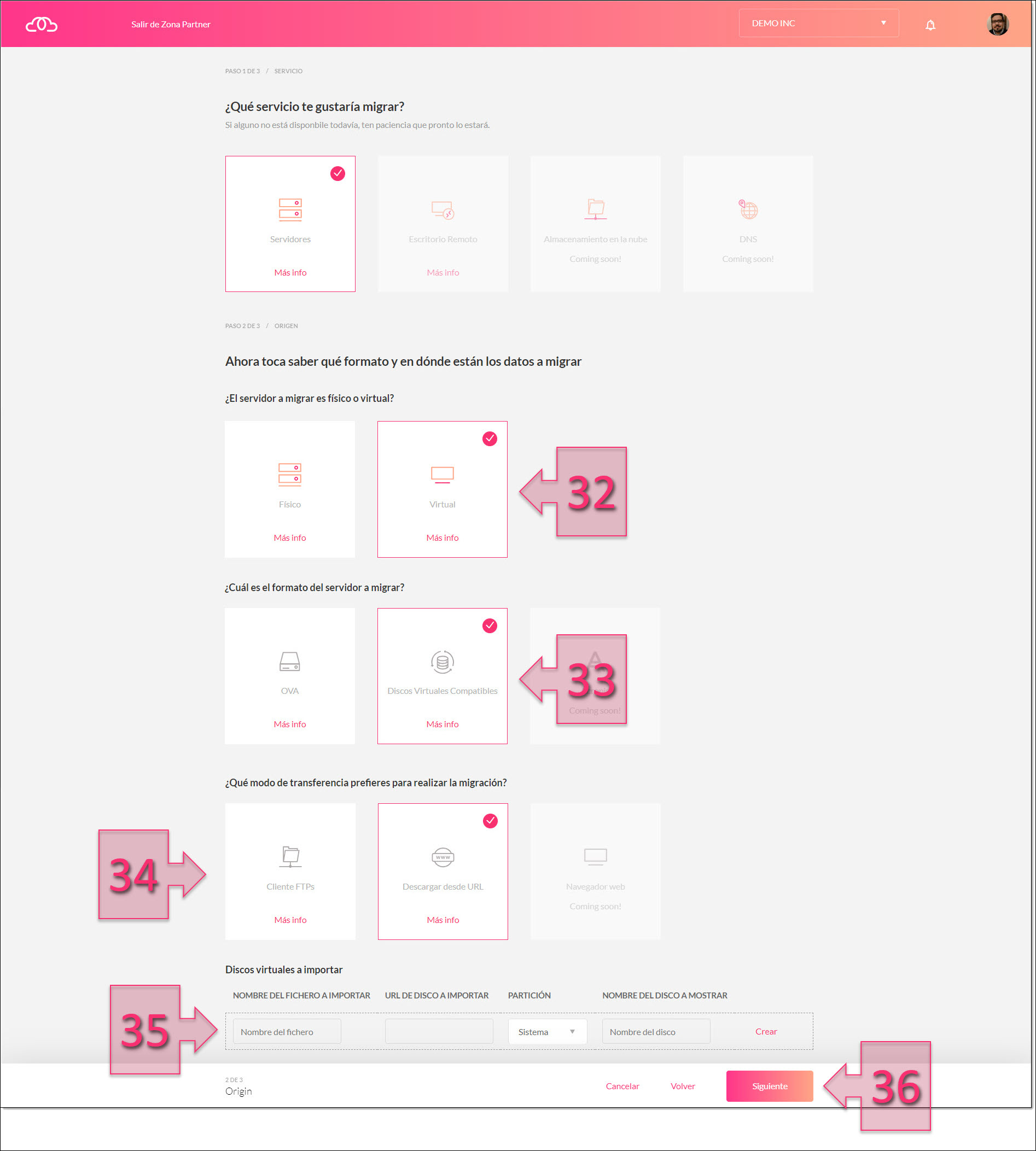

Next, you need to select whether the server is physical or virtual. In this case, you need to click on “Virtual” (32).

The next option asks you to select the form in which the disk will be imported. Select “Compatible Virtual Disks” (33), as this is the format that AWS provides.

Next, you need to specify how the virtual disk will be transferred to Jotelulu. Here, you have two choices. You can either use FTP or a URL. For this tutorial, we will choose “Download using a URL” (34) as it is the faster method and requires less effort on the part of the customer.

NOTE: When you perform the migration using a URL, you will have to use the same device that you used to export the VM from AWS.

Then, enter the details of the virtual disks to import (35):

- The file name, which must include the extension (defined in Part 3).

- The URL of the disk to import, which is provided by the bucket in Part 3.

- Whether it is a system disk or a data disk.

- A disk display name, which is how the server will be identified on the platform after the migration.

Once you have entered these details, click on “Next” (36) to save your changes and proceed.

Part 4 – Choose the file format and location

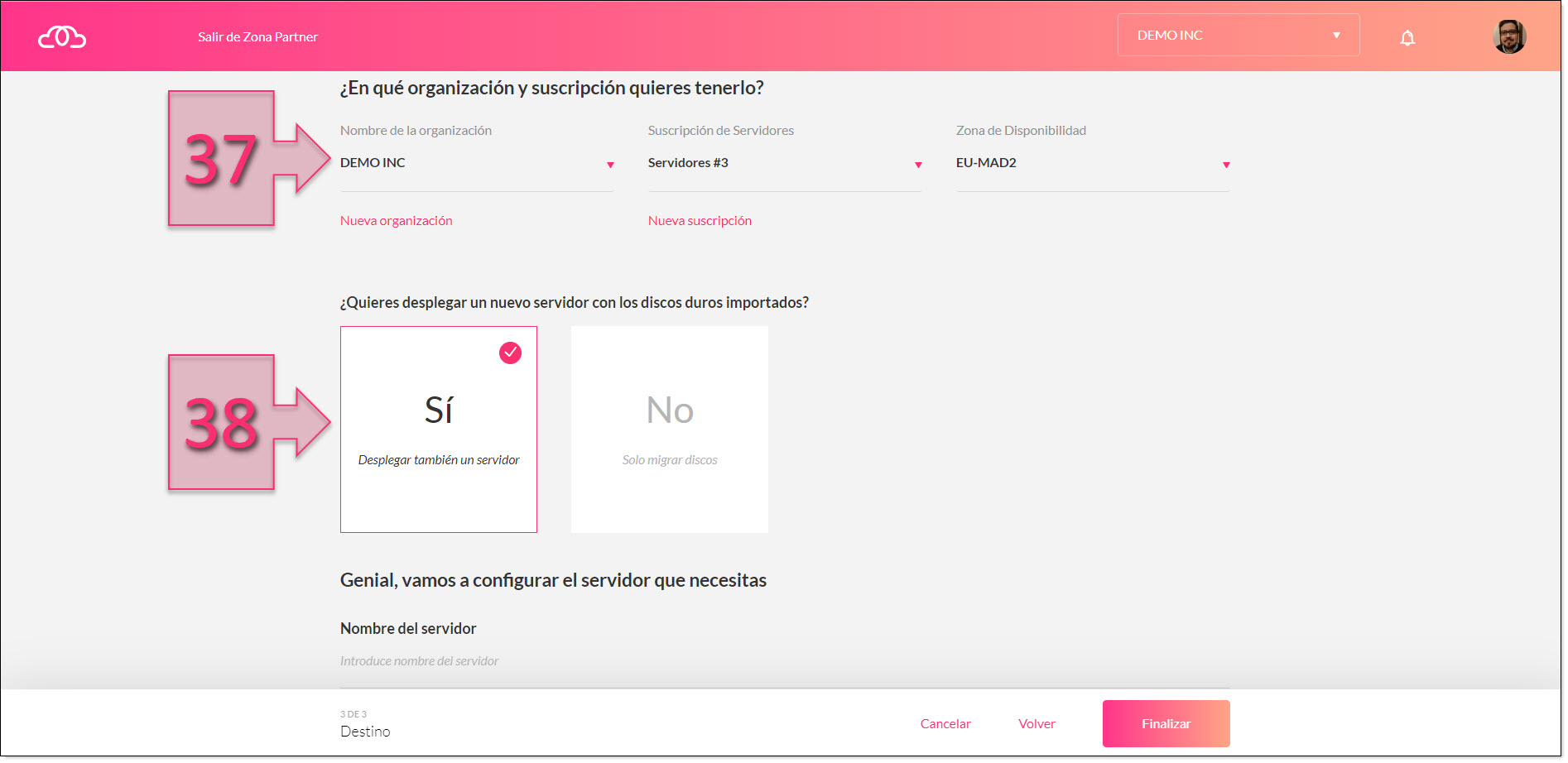

You will also have to enter details relating to the organisation, subscription and availability zone (37) that you want to import the new disk to. This will depend on the organisations and subscriptions that you have registered.

The last part of this process asks “Do you want to deploy a new server with the imported disks?” (38) If you do not wish to deploy the server, you can simply leave the uploaded image ready for future use. However, usually, the answer to this question will be “Yes”.

Part 4 – Select the organisation, subscription and availability zone

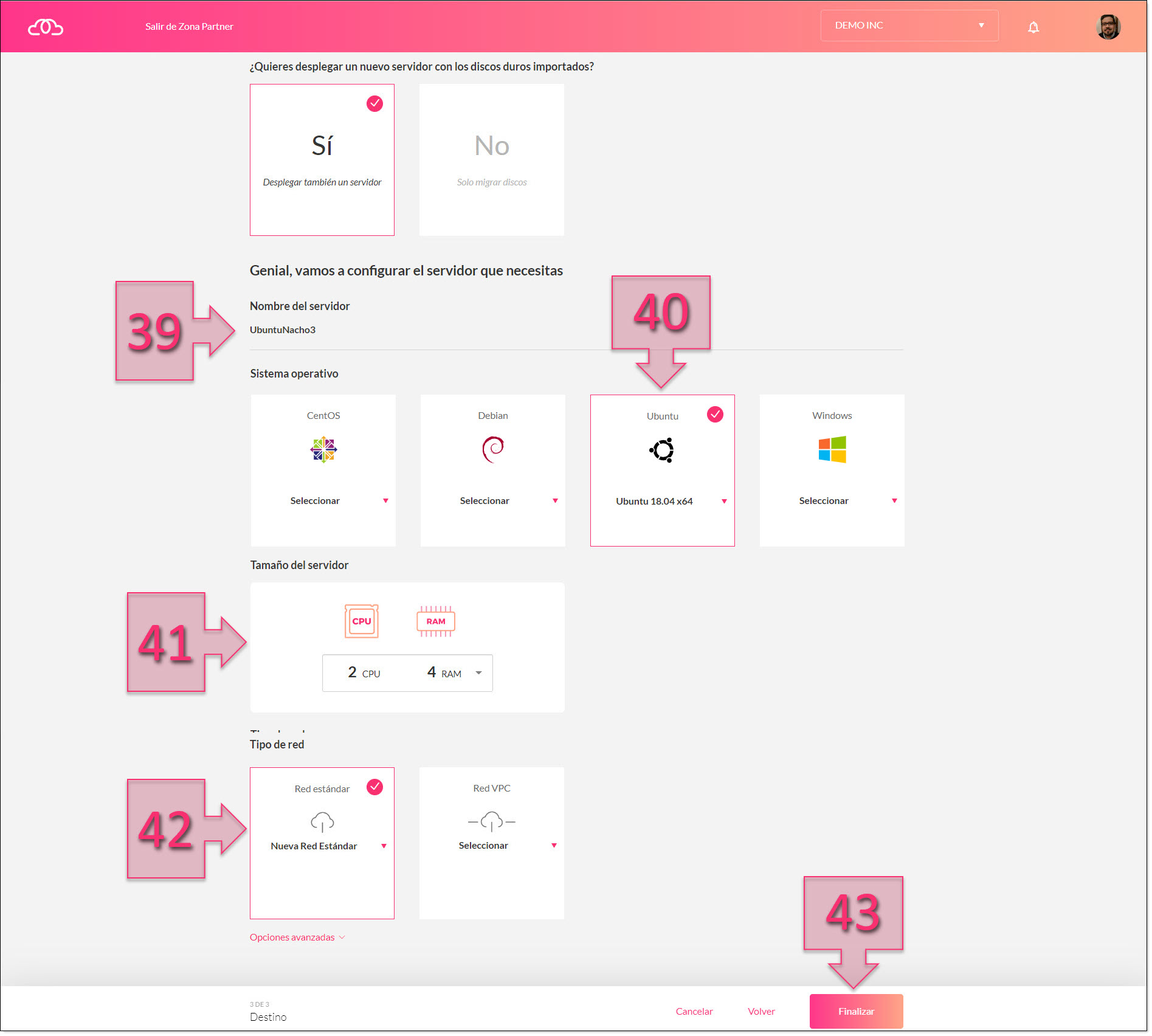

If you click on “Yes”, you will then be asked for a series of details to configure your new Jotelulu server.

First, you will have to give the server a name (39). This name can include spaces.

Next, you will have to select an operating system (40).

Then, you will have to select a size for your new server (41), where you will specify the CPU and RAM that you need.

Lastly, you will need to select a network type (42), depending on whether you need a standard network or a VPC.

Once you have done all this, click on “Finish” (43).

Part 4 – Configuring your new server

Once you finish this step, you will have configured your migration and a series of internal processes will run to deploy your new Jotelulu server, which will be available in your Servers subscription area.

Conclusions and next steps:

Migrating servers between clouds can be complex, but at Jotelulu, we have tried to design our processes and tools to make things much simpler and more comfortable for our users.

As you have seen in this tutorial, migrating a server from AWS to Jotelulu does not need to be a headache, and we offer a complete guided process through our platform to help you. This way, you can move your data onto our infrastructure relatively easily. However, if you do encounter any problems, don’t hesitate to contact us so that we can help you.

If you found this tutorial useful and would like to learn more about the migration process in particular, the following tutorials may also be of interest to you:

- Migration Tool Quick Start Guide

- How to Migrate a Server from VMWare to Jotelulu.

- How to Migrate an On-premises Windows Server to Jotelulu.

- How to Migrate an On-premises GNU/Linux Server to Jotelulu.

- How to Migrate a Virtual Machine from MS Azure to Jotelulu.

- How to Migrate a Server from Hyper-V to Jotelulu.

Welcome to Jotelulu!