In this tutorial, you’ll learn how to deploy a load balancer on your Servers subscription to improve stability and prevent possible service interruptions caused by overloading the server.

The aim of this feature is to share the workload across the different servers on your subscription. You will therefore need at least two servers to follow this tutorial.

With this simple tutorial, you’ll see how easy it is to set up a load balancer on your subscription.

How to Deploy a Load Balancer

Before you start

To successfully complete this tutorial and deploy a load balancer, you will need:

- To be registered with an organisation on the Jotelulu platform and to have logged in.

- To have administrator permissions for the entire organisation and its users.

- To have at least 2 servers deployed on the same Servers subscription.

Part 1 – Navigate to the Load Balancers Section on the Jotelulu Platform

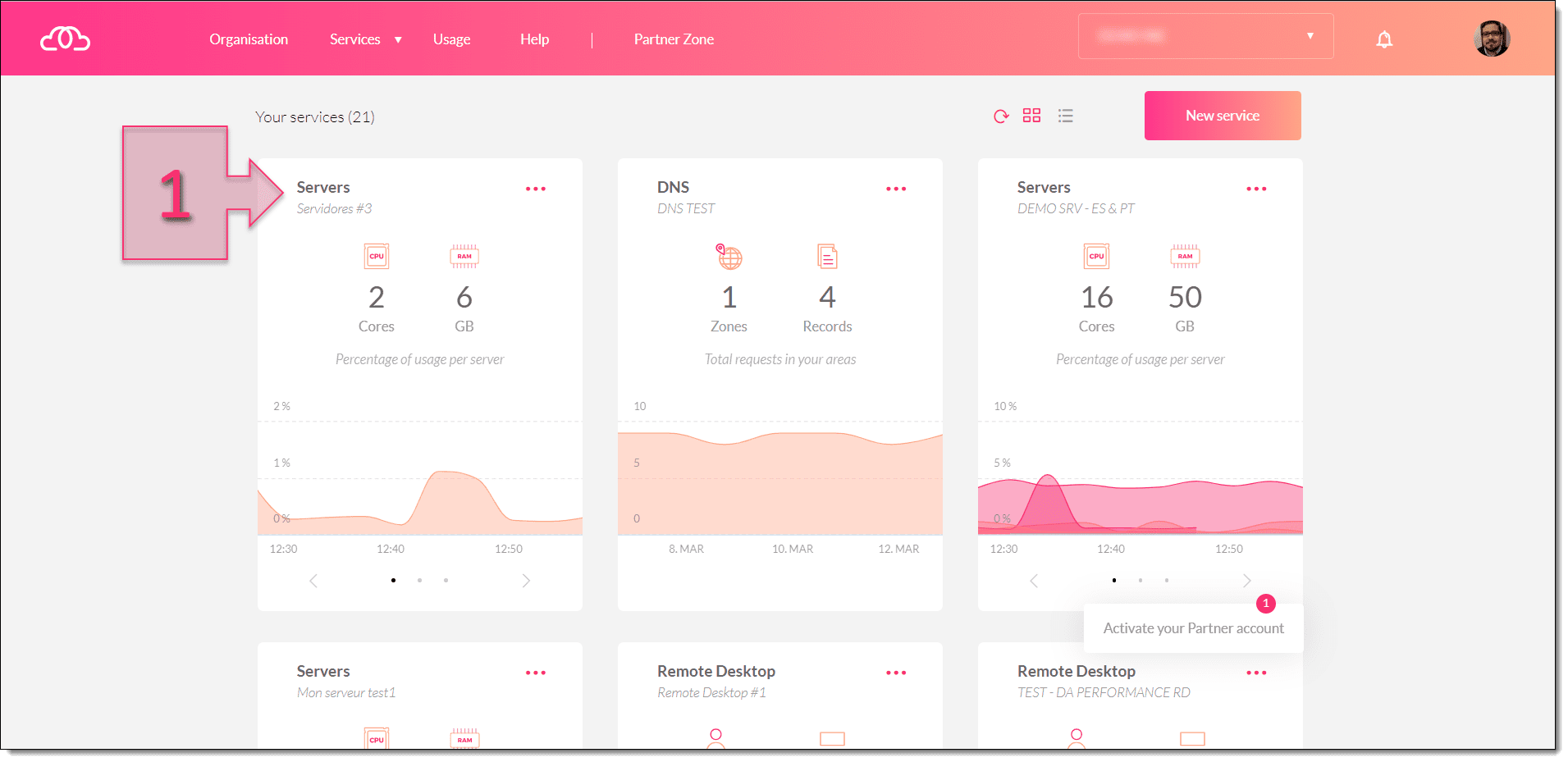

Starting from the initial Dashboard page, open your Servers subscription (1) by clicking on the corresponding card.

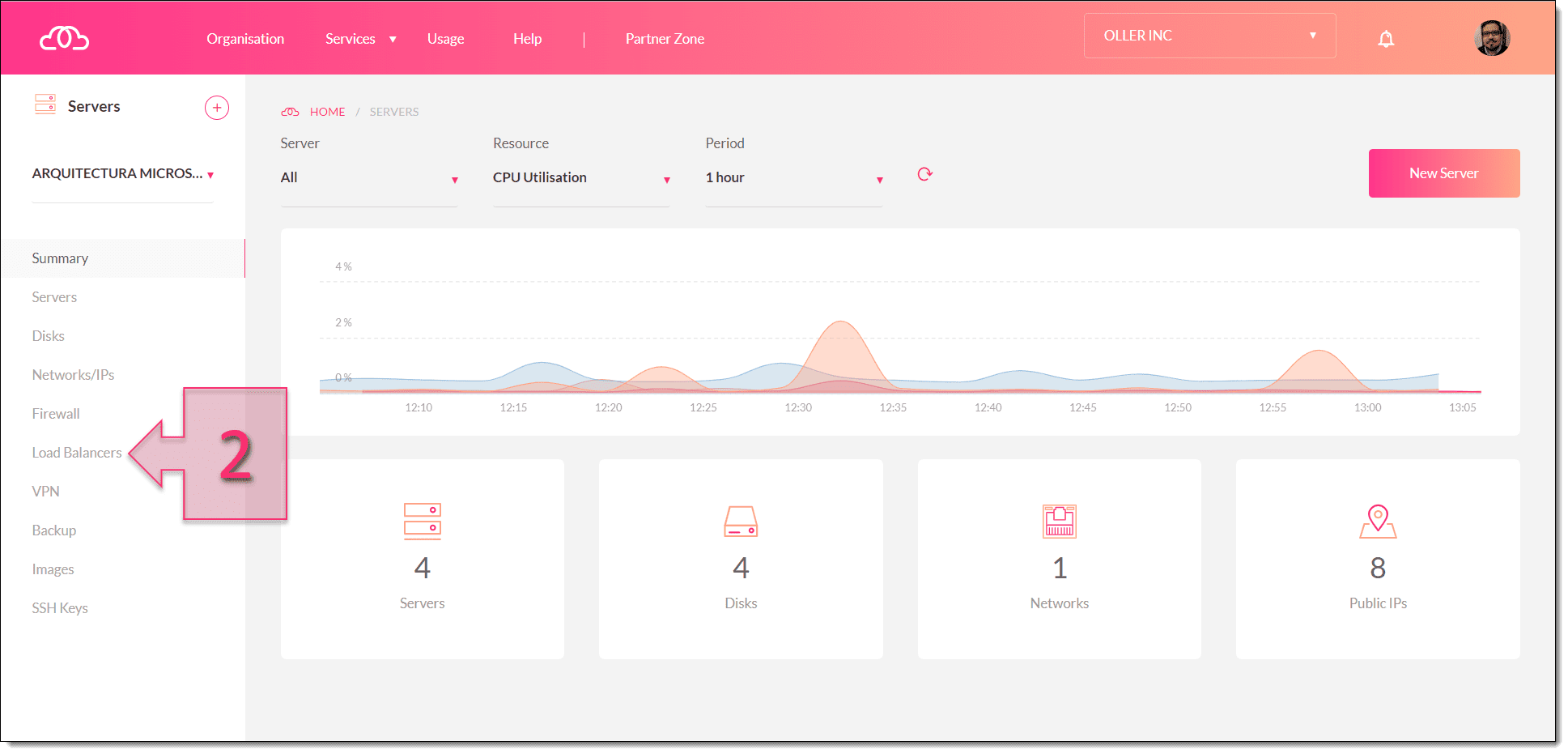

Next, click on “Load Balancers” in the left-hand menu (2).

NOTE: You do not need to have any servers deployed when creating Load Balancer rules. You can create the rules first and add servers to them later.

Part 2 – Configuring the Load Balancer

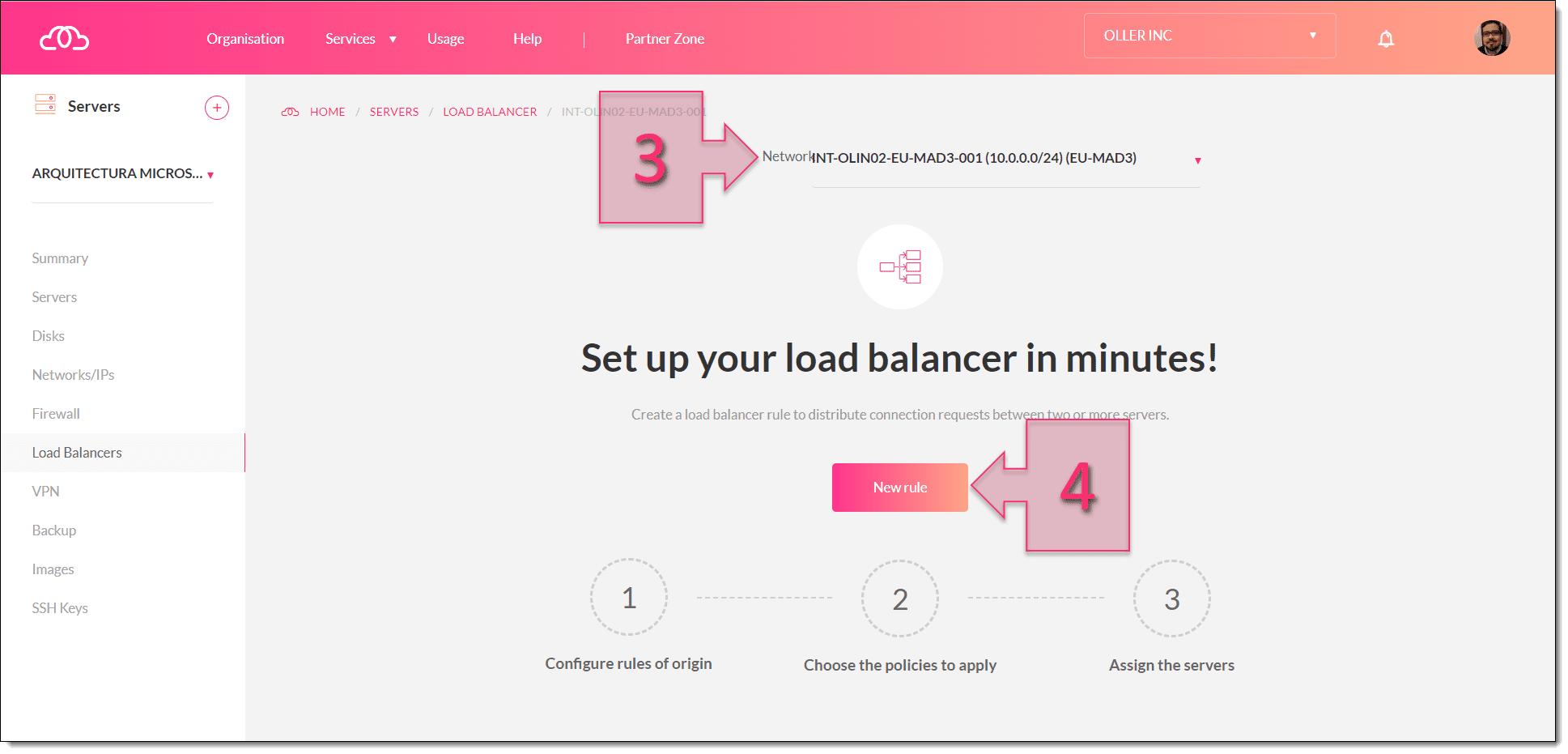

Once you have opened the Load Balancers section, you will see one of two different pages depending on your circumstances:

- Case 1: If you haven’t deployed any load balancers before, you’ll see the page shown in the image above.

- Case 2: If you have already deployed load balancers previously, you’ll see a list of rules.

For the purposes of this tutorial, we’ll assume that you haven’t yet set up any load balancer rules. In this case, you need to select the network on which you’re going to create the rule (3) and then click on “New Rule” (4).

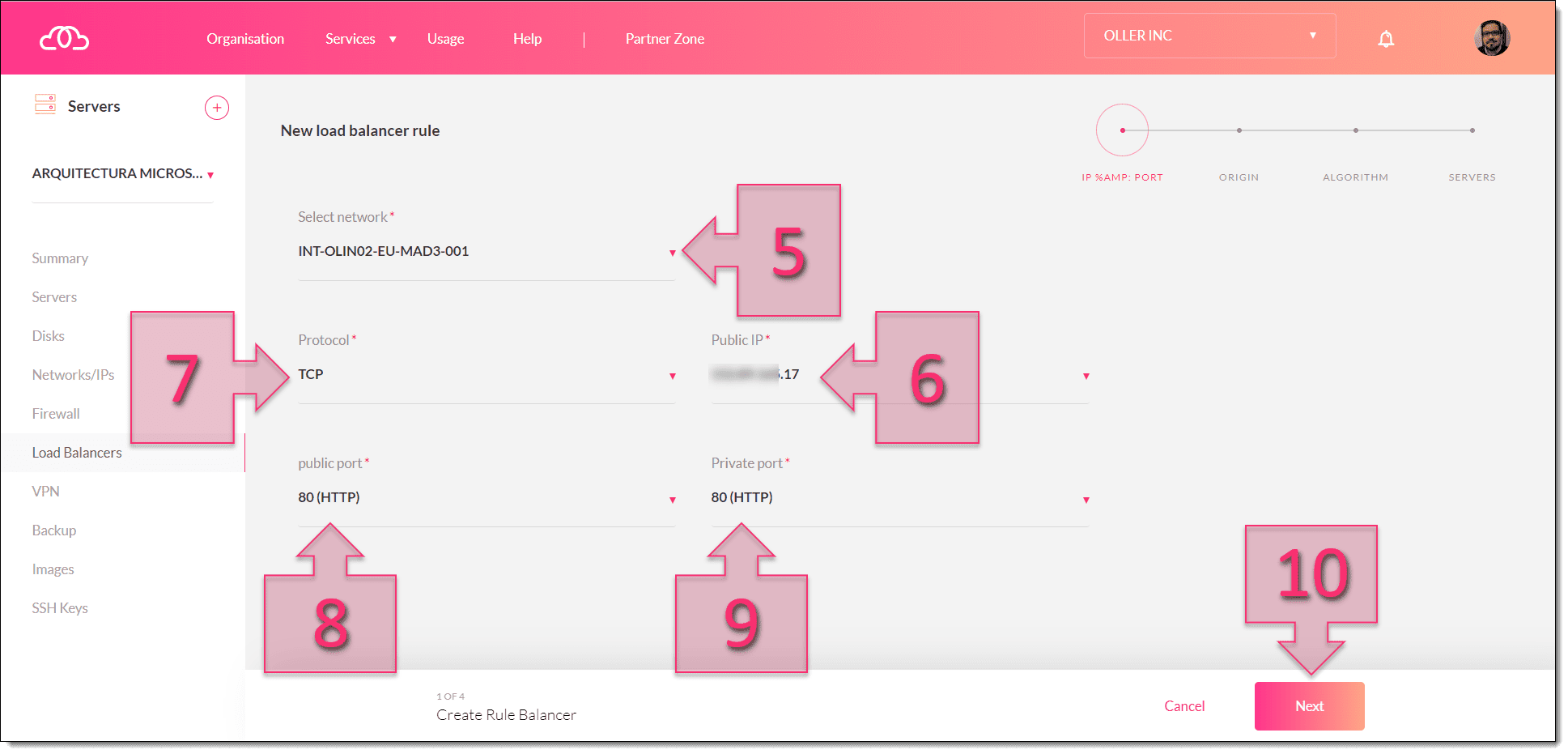

When creating a new rule, the first thing you will need to do is select the network that the new rule will apply to. You can do this by selecting from the drop-down list under “Select network” (5).

Depending on the network you’ve selected, you’ll then see a list of IP addresses from which to choose a Public IP address (6).

Next, you need to select a Protocol (7), either TCP or UDP. Then, you need to select the Public Port (8) that will listen for requests from the internet and the Private Port (9) on the servers that those requests will be forwarded to.

For the Public Port, you have the option to use port 80 (HTTP), port 443 (HTTPS) or enter a custom port.

NOTE: It’s important to remember that a port can only be used by one rule on any given network. This means you can’t create two rules that use the same port on the same network.
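To make the port restriction concrete, here is a minimal Python sketch of the kind of check involved. It is only an illustration, not the platform's actual code: the `Rule` class, the `conflicts` function and the network names are hypothetical, and it assumes the restriction applies to the public port regardless of protocol.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """Hypothetical model of a load balancer rule (not the platform's schema)."""
    network: str
    public_port: int
    private_port: int
    protocol: str = "TCP"


def conflicts(existing, candidate):
    """Return True if another rule already uses the same public port
    on the same network (assumption: protocol is not considered)."""
    return any(
        rule.network == candidate.network
        and rule.public_port == candidate.public_port
        for rule in existing
    )


rules = [Rule("net-a", 80, 8080)]
print(conflicts(rules, Rule("net-a", 80, 9090)))  # True: port 80 is taken on net-a
print(conflicts(rules, Rule("net-b", 80, 8080)))  # False: different network
```

If you plan several rules in advance, listing them like this can help you spot clashes before you enter them in the platform.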

Once you have entered these settings, click on “Next” (10).

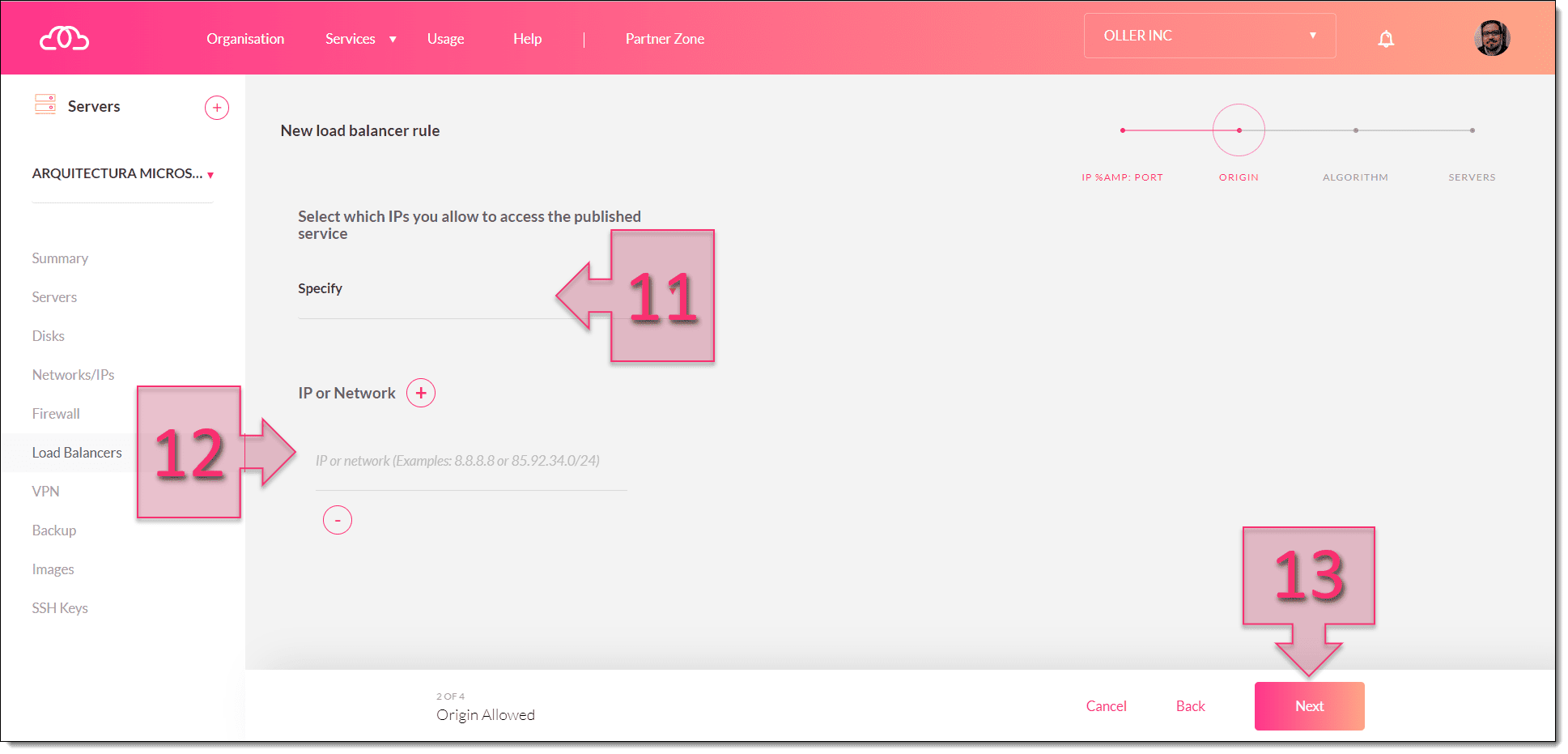

On the next page, you’ll need to specify the external IP addresses that will have access to the service that you offer. Here, you have two options:

- Any: Allows access from any network. This is the least-secure option.

- Specify: Only allows access for previously specified networks.

If you select “Specify” (11), you will then need to provide an IP address or range that will have access (12). You can add multiple addresses or ranges.

Once you’ve assigned the IP addresses or ranges that will have access to your services, click on “Next” (13).
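If you are unsure whether a given client would be covered by the addresses and ranges you entered under “Specify”, you can reason about it with Python's standard `ipaddress` module. This is only a sketch: the `is_allowed` function and the example addresses are hypothetical, and it assumes that ranges are written in CIDR notation, which the platform may or may not use.

```python
import ipaddress


def is_allowed(client_ip, allowed):
    """Return True if client_ip matches any allowed address or CIDR range."""
    address = ipaddress.ip_address(client_ip)
    # A single address such as "198.51.100.7" is treated as a one-host network.
    networks = [ipaddress.ip_network(entry, strict=False) for entry in allowed]
    return any(address in network for network in networks)


# Example values taken from the IP ranges reserved for documentation.
allowed = ["203.0.113.0/24", "198.51.100.7"]
print(is_allowed("203.0.113.42", allowed))  # True: inside the /24 range
print(is_allowed("198.51.100.7", allowed))  # True: exact address match
print(is_allowed("192.0.2.1", allowed))     # False: not in the list
```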

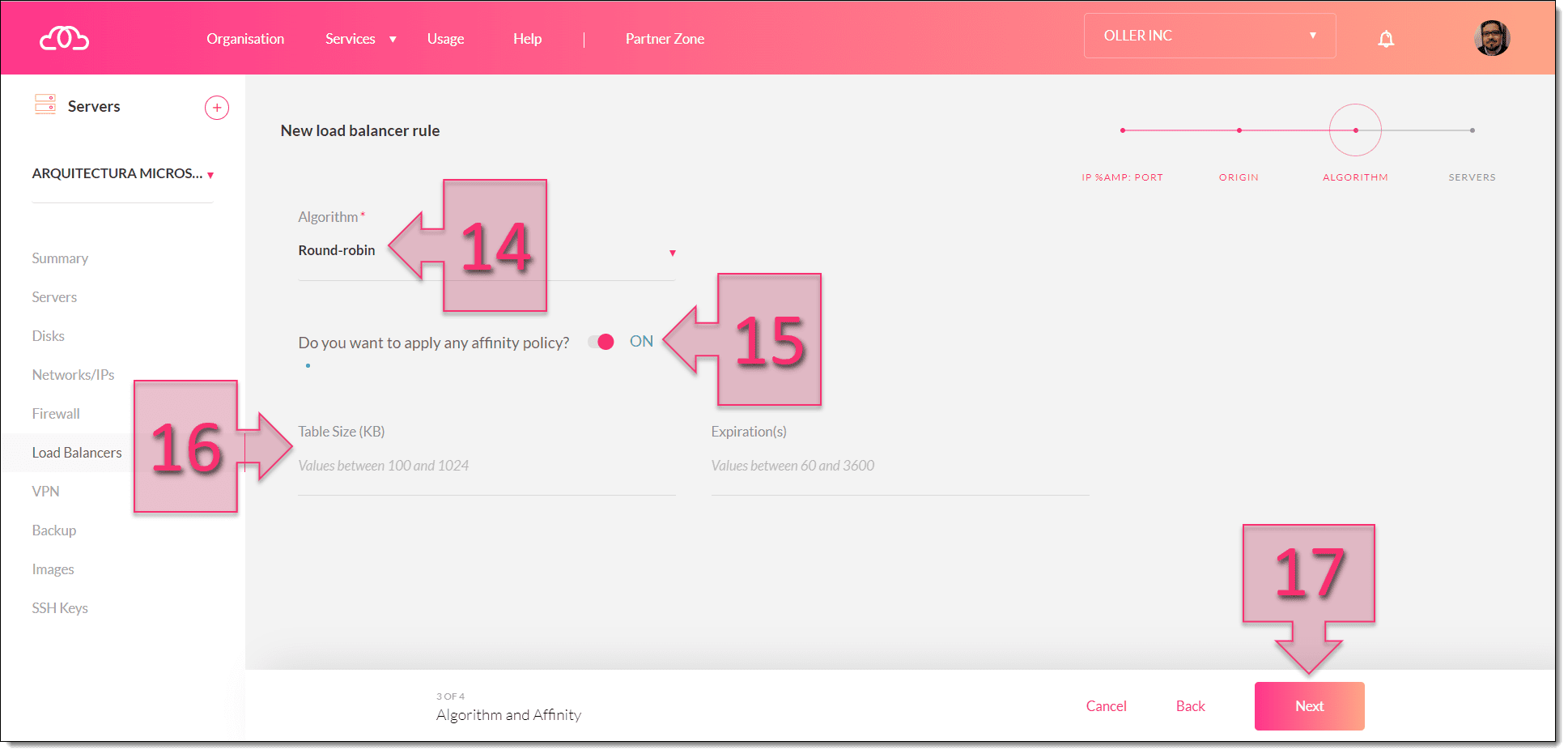

Next, you need to select the algorithm (14) that will be used to distribute requests among the servers providing the service.

At this point, you can choose from the following algorithms:

- Round Robin: Requests are assigned in a circular manner, sending the first request to the first server, the second request to the second server, and so on. It is the most commonly used option.

- Least connections: Each request is assigned to the server with the fewest active connections, to avoid one server becoming overloaded while another sits idle.

- Source: Requests are assigned based on their origin (typically the client’s IP address), so requests from the same origin tend to reach the same server.
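The three algorithms above can be sketched in a few lines of Python. This is a simplified illustration rather than the platform's implementation: the server names are made up, and the hashing used for “Source” is an assumption about how origin-based assignment usually works.

```python
import itertools
import zlib

servers = ["srv-1", "srv-2", "srv-3"]  # hypothetical server pool


def round_robin(pool):
    """Return an iterator that hands out servers in a repeating cycle."""
    return itertools.cycle(pool)


def least_connections(active):
    """Pick the server with the fewest active connections.

    `active` maps each server name to its current connection count.
    """
    return min(active, key=active.get)


def source_based(client_ip, pool):
    """Map a client IP to a server deterministically (assumed hashing scheme)."""
    return pool[zlib.crc32(client_ip.encode()) % len(pool)]


picker = round_robin(servers)
print([next(picker) for _ in range(4)])  # ['srv-1', 'srv-2', 'srv-3', 'srv-1']
print(least_connections({"srv-1": 5, "srv-2": 2, "srv-3": 7}))  # srv-2
# The same client IP always maps to the same server:
print(source_based("203.0.113.42", servers) == source_based("203.0.113.42", servers))  # True
```

As a rough guide, Round Robin suits servers with similar capacity and short requests, while Least connections copes better when some requests take much longer than others.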

You will also need to select whether or not to apply an affinity policy (15) using the toggle switch. An affinity policy keeps requests from the same client going to the same server.

If you choose to apply an affinity policy, you’ll need to provide the following details (16):

- Table size (KB).

- Expiration (s).
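To see how these two settings interact, here is a rough Python sketch of an affinity table. It is an illustration under stated assumptions, not the platform's implementation: the `StickyTable` class is hypothetical, and the table size is modelled as a maximum number of entries rather than in KB.

```python
import time


class StickyTable:
    """Hypothetical affinity table: remembers which server each client used."""

    def __init__(self, max_entries, expire_seconds, clock=time.monotonic):
        self.max_entries = max_entries        # stands in for "Table size"
        self.expire_seconds = expire_seconds  # stands in for "Expiration (s)"
        self.clock = clock
        self.entries = {}  # client_ip -> (server, last_seen)

    def lookup(self, client_ip):
        """Return the remembered server, or None if unknown or expired."""
        entry = self.entries.get(client_ip)
        if entry is None:
            return None
        server, last_seen = entry
        if self.clock() - last_seen > self.expire_seconds:
            del self.entries[client_ip]  # entry is too old, so forget it
            return None
        self.entries[client_ip] = (server, self.clock())  # refresh on use
        return server

    def remember(self, client_ip, server):
        """Store the client's server, evicting the oldest entry when full."""
        if client_ip not in self.entries and len(self.entries) >= self.max_entries:
            oldest = min(self.entries, key=lambda ip: self.entries[ip][1])
            del self.entries[oldest]
        self.entries[client_ip] = (server, self.clock())


table = StickyTable(max_entries=1000, expire_seconds=30)
table.remember("203.0.113.42", "srv-2")
print(table.lookup("203.0.113.42"))  # srv-2
```

In this sketch, a larger table remembers more clients at once, and a longer expiration keeps a client tied to its server for longer between requests.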

Once you have entered all these settings, click on “Next” (17).

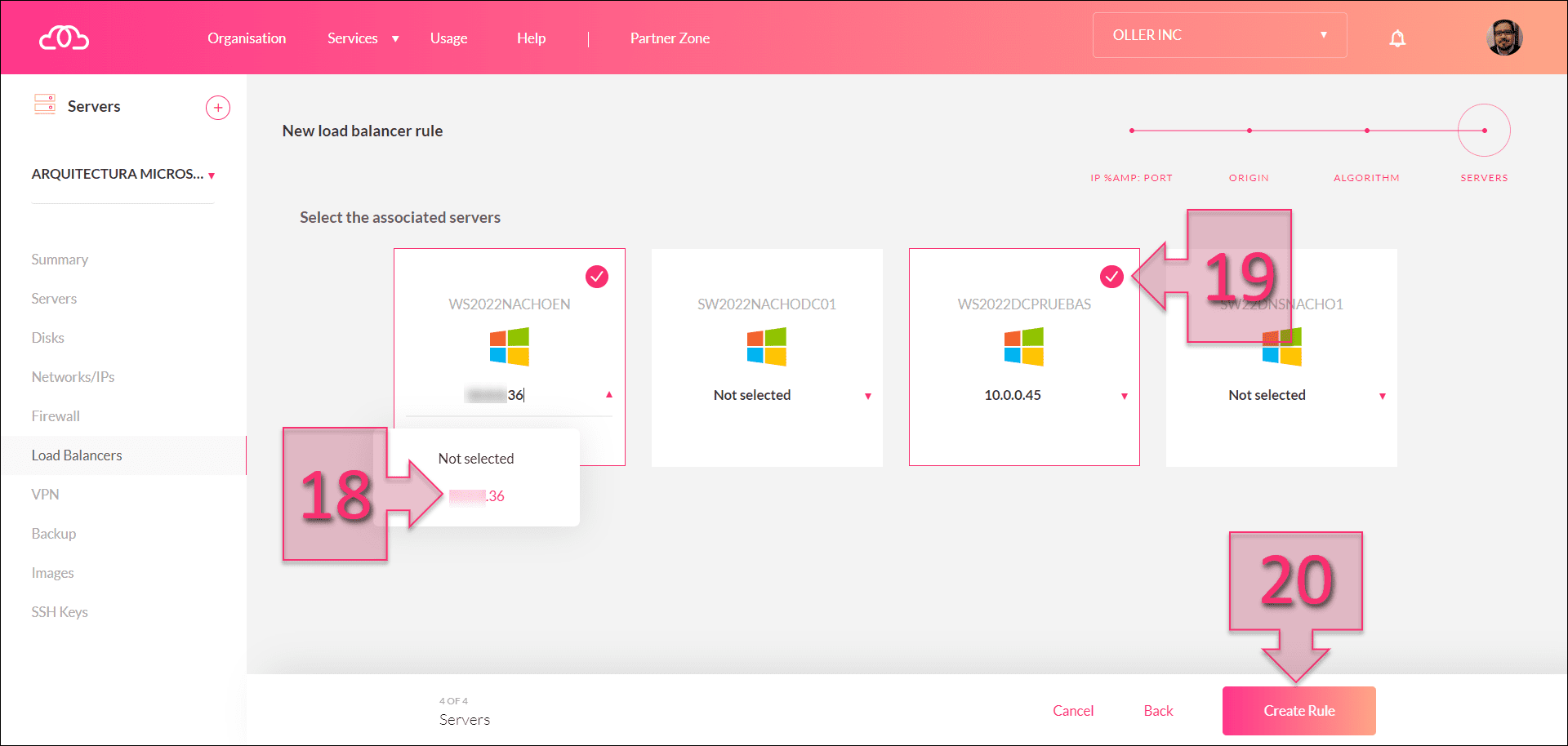

The next step is to select the machines that you want to form part of the server pool. These are the servers that the load balancer will send requests to.

To do this, simply click on a server (18), click on “Not selected” and choose an IP address.

When you select a machine to be included in the server pool, you will see a tick mark next to it (19).

Once you have selected all the servers you want, click on “Create Rule” (20).

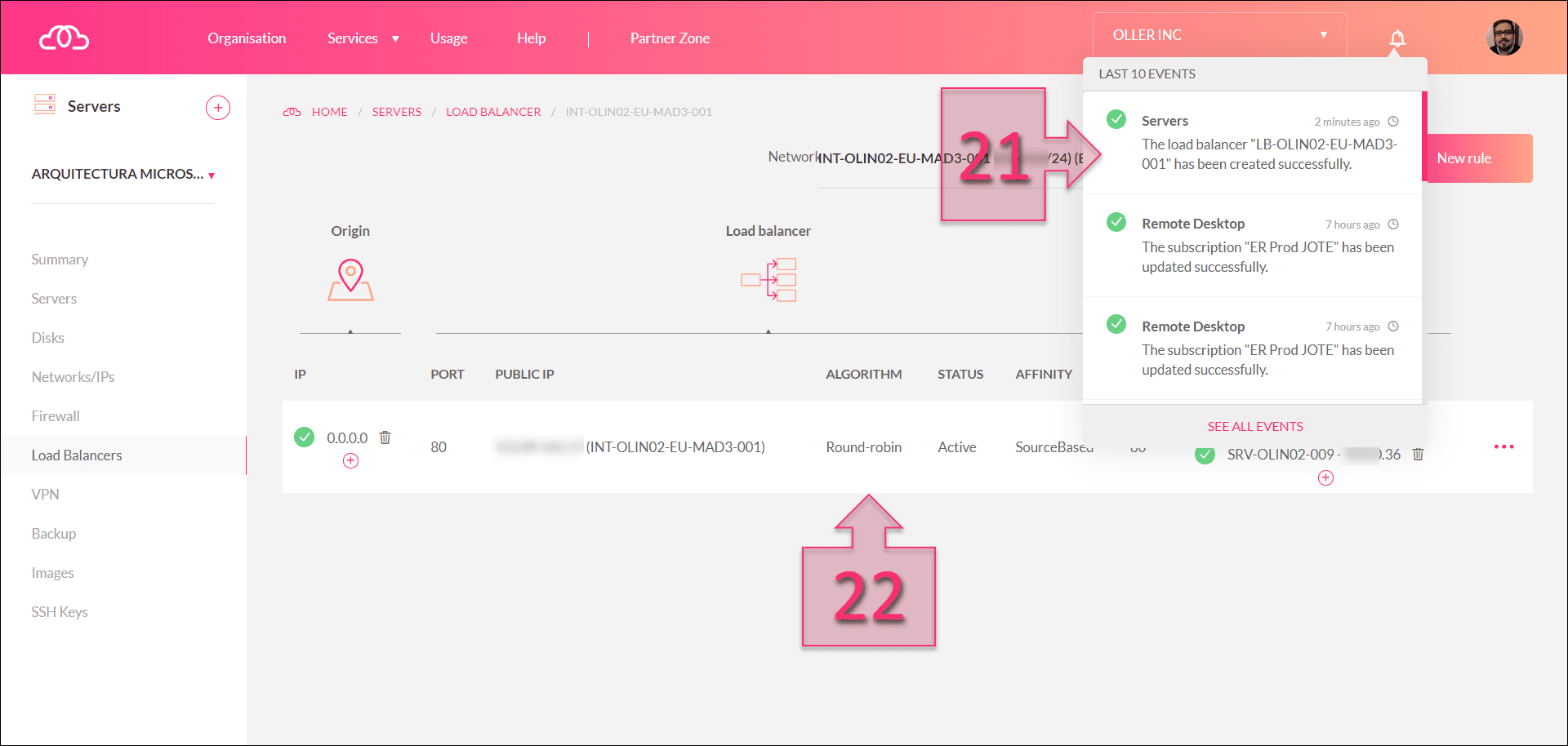

At this point, you’ll see a message saying “Servers: Creating Load Balancer <Network_Name>”, where <Network_Name> is the network that you chose at the beginning of the process.

Once the rule has been created, you’ll see the message “Servers: The Load Balancer <Network_Name> has been created successfully” (21).

You’ll also be able to see the new rule in the list (22), although you might need to refresh the page to see it.

You have now successfully deployed a load balancer on your Jotelulu Servers subscription.
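If your rule uses TCP, one quick way to check that the public port accepts connections is a short Python script like the one below. This is just a sketch: the `tcp_port_open` function is hypothetical, the IP address in the comment is a placeholder, and the check will only succeed from a machine whose IP address is allowed by the access settings you chose earlier.

```python
import socket


def tcp_port_open(host, port, timeout=3.0):
    """Return True if a TCP connection to host:port can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unreachable hosts.
        return False


# Example (replace the placeholder with your rule's public IP and port):
# print(tcp_port_open("203.0.113.10", 80))
```

A successful connection only shows that the load balancer is accepting traffic on that port. To confirm that requests are actually being shared out, check the logs or connection counts on each server in the pool.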

Summary

Working with load balancers allows you to share the workload across multiple servers to prevent service interruptions and optimise work queues. This means a more reliable and stable service for your customers. As you have seen in this tutorial, just like other Jotelulu products and services, it’s really easy to set up.

We hope that this tutorial contains everything you need to get started with load balancers. However, if you have any questions or run into any issues, simply get in touch with our team by writing to platform@jotelulu.com or calling +34 911 333 710, and we will help you.

Thanks for choosing Jotelulu!